Dynamic Reviewer is made up of three solutions: HAST (IAST+RASP), DAST and APM.

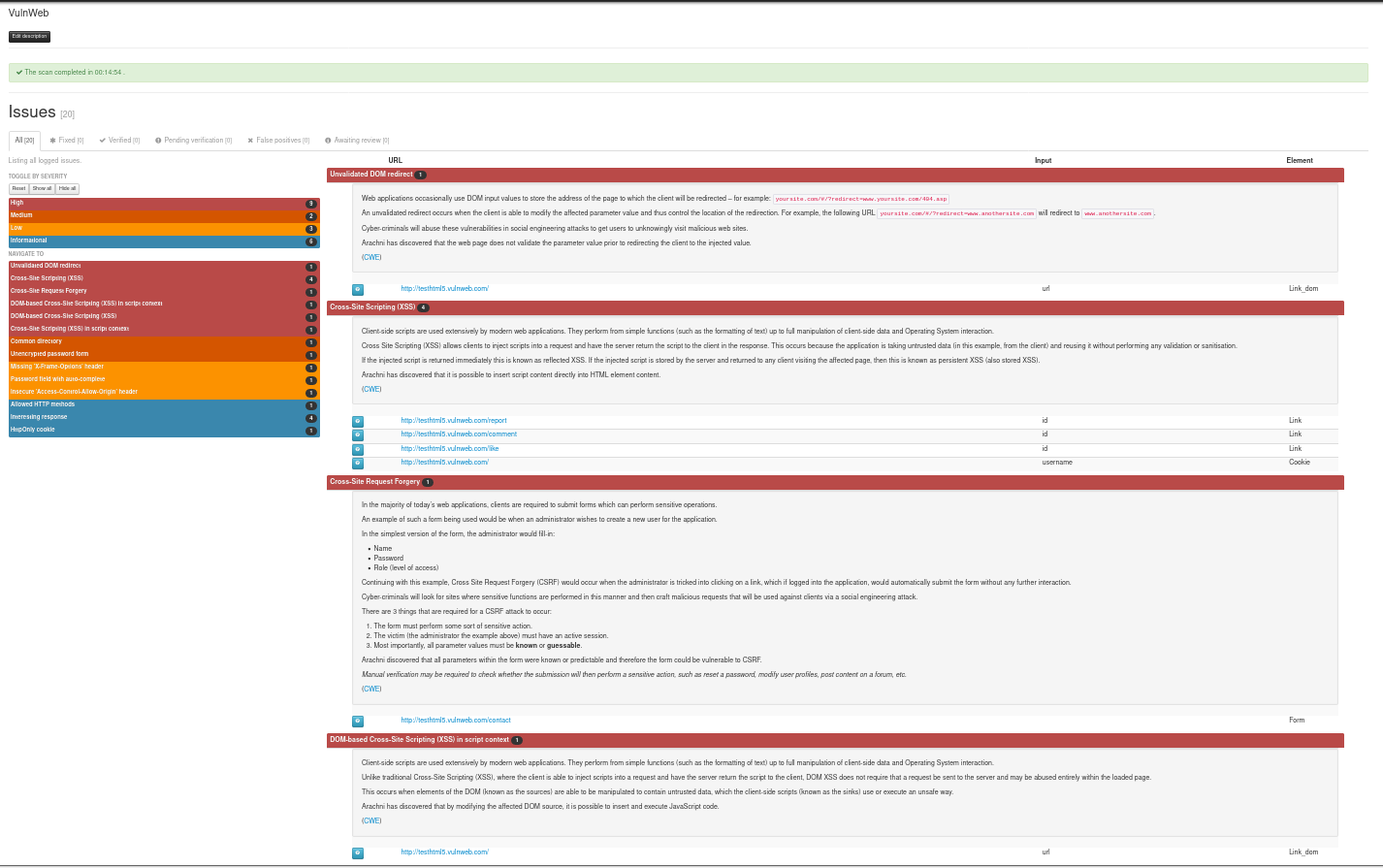

DAST: Penetration Testing made easy

With the Dynamic Reviewer Light-PenTest module, Security Reviewer becomes a hybrid solution. You can inspect your web application while it is running: directly from Security Reviewer, through plugins for Eclipse or other IDEs such as Visual Studio, or from a browser.

The following installation options are available:

Desktop for Windows, Linux or Mac. It is based on a re-engineered version of IronWASP, but uses our own Core Engine and offers additional professional features for Pen Testers, such as deep discovery, a plugin development console and HTTP request manipulation.

Web App on premises. It is based on the Arachni Web UI, but uses our own Core Engine. With Arachni we also share the Dispatcher infrastructure (via the Arachni-RPC protocol) and the results format (AFR).

Command Line Interface. It is our own multi-platform Core Engine, written entirely by us in Python and C++ and started from scratch in 2015, covering 200% more security checks than the market leaders.

Cloud. Our Web App, offered on a high-performance, secured cloud infrastructure in Europe or America.

Its special lightweight PenTest features allow you to explore vulnerabilities in your web applications while keeping the software securely in your own hands, on your premises. There is no need for backups before a PenTest: we guarantee that our tool will preserve the integrity of your system and database.

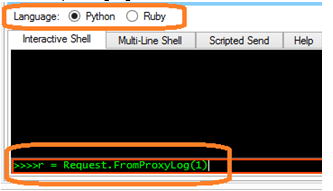

The plugin-based architecture of Dynamic Reviewer DAST provides a VB.NET, Python and Ruby scripting environment with an API (Desktop version) and full access to all of the tool's functionality. Pen Testers use it to create precise crawlers and scanners, write their own fuzzers, craft custom requests, analyse logs, etc.

Dynamic Reviewer DAST provides a robust and stable framework for Web Application Security Testing, suitable for Security Analysts, QA and Developers alike, with support for handling false positives and false negatives. It is designed for an optimum mix of manual and automated testing and allows you to design customised penetration tests, offering an easy-to-use GUI and advanced scripting capabilities.

Best Performance

Scan times using traditional tools can range from a few hours to a couple of weeks, maybe even more. This means that wasted time can easily pile up, even when we’re talking about mere milliseconds per request/response.

Dynamic Reviewer benefits from great network performance due to its asynchronous HTTP request/response model. In this context, and from a high-level perspective, asynchronous I/O means that you can schedule operations so that they appear to happen at the same time, which in turn means higher efficiency and better bandwidth utilization.

It provides a high-performance environment for executing the required tests while making it very easy to add new ones. Thus, you can rest assured that the scan will be as fast as possible and that performance will be limited only by your own or the audited server’s physical resources.
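As a back-of-the-envelope example, 100,000 requests with 50 ms of latency each take about 5,000 s (roughly 83 minutes) when issued one after another, but only about 250 s if 20 are kept in flight at once, ignoring server-side limits. The sketch below illustrates that idea with Python's standard `asyncio` module. It is not Dynamic Reviewer's engine: the hypothetical `fake_request` coroutine stands in for a real HTTP round-trip by sleeping, and a semaphore caps the number of requests in flight, which is one common way to express an adjustable concurrency limit.

```python
import asyncio
import time


async def fake_request(url: str, latency: float) -> str:
    """Hypothetical stand-in for an HTTP round-trip: wait, then 'respond'."""
    await asyncio.sleep(latency)
    return f"200 OK {url}"


async def scan(urls: list[str], concurrency: int, latency: float) -> list[str]:
    # The semaphore bounds how many requests are in flight at any moment.
    limit = asyncio.Semaphore(concurrency)

    async def bounded(url: str) -> str:
        async with limit:
            return await fake_request(url, latency)

    # gather() schedules every request up front and returns results in input order.
    return await asyncio.gather(*(bounded(u) for u in urls))


if __name__ == "__main__":
    urls = [f"http://example.com/page/{i}" for i in range(100)]
    start = time.perf_counter()
    responses = asyncio.run(scan(urls, concurrency=20, latency=0.05))
    elapsed = time.perf_counter() - start
    # Sequentially: about 100 * 0.05 = 5 s. With 20 in flight: roughly 0.25 s.
    print(len(responses), f"{elapsed:.2f}s")
```

While one coroutine waits on the network, the event loop runs the others, so total time is governed by latency divided by concurrency rather than by the sum of all latencies.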

Leaving aside unnecessary technical details, the gist is as follows:

Every type of resource usage has been massively reduced: CPU, RAM and bandwidth.

CPU intensive code has been rewritten and key parts of the system are now 2 to 55 times faster, depending on where you look.

The scheduling of all scan operations has been completely redesigned.

DOM operations have been massively optimized and require much less time and overall resources.

Suspension to disk is now nearly instant.

Previously, browser jobs could not be dumped to disk and had to be completed first, which could cause large delays depending on the number of queued jobs.

Default configuration is much less aggressive, further reducing the amount of resource usage and web application stress.

Talk is cheap though, so let’s look at some numbers:

| | Duration | RAM | HTTP requests | HTTP requests/second | Browser jobs | Seconds per browser job |
|---|---|---|---|---|---|---|
| New engine | 00:02:14 | 150MB | 14,504 | 113.756 | 211 | 1.784 |
| Old | 00:06:33 | 210MB | 34,109 | 101.851 | 524 | 3.88 |

Larger real production site (cannot disclose):

| | Duration | RAM | HTTP requests | HTTP requests/second | Browser jobs | Seconds per browser job |
|---|---|---|---|---|---|---|
| New engine | 00:45:31 | 617MB | 60,024 | 47.415 | 9404 | 2.354 |
| Old | 12:27:12 | 1,621MB | 123,399 | 59.516 | 9180 | 48.337 |

As you can see, the impact of the improvements becomes more substantial as the target’s complexity and size increase, especially in terms of scan duration and RAM usage. For the production site, the new engine also consistently yielded better coverage, which is why it performed more browser jobs.
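The speed-ups implied by the tables can be checked with a few lines of arithmetic. The script below converts the Duration column to seconds and divides the old figures by the new ones. It only restates the published numbers and adds no new measurements; the labels "smaller target" and "production site" are ours.

```python
def to_seconds(hms: str) -> int:
    """Convert an 'HH:MM:SS' duration string to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


# (old, new) values copied from the two tables above.
runs = {
    "smaller target": {"duration": ("00:06:33", "00:02:14"), "ram_mb": (210, 150)},
    "production site": {"duration": ("12:27:12", "00:45:31"), "ram_mb": (1621, 617)},
}

for name, run in runs.items():
    old_d, new_d = (to_seconds(d) for d in run["duration"])
    old_ram, new_ram = run["ram_mb"]
    print(f"{name}: duration {old_d / new_d:.1f}x shorter, RAM {old_ram / new_ram:.1f}x lower")
```

Running it prints a 2.9x shorter duration and 1.4x lower RAM for the smaller target, versus 16.4x and 2.6x for the production site (44,832 s against 2,731 s), which is the trend the paragraph above describes.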

End result:

Runs fast on under-powered machines.

You can run many more scans at the same time.

You can complete scans many times faster than before.

If you’re running scans in the “cloud”, it means that it’ll cost you many, many times less than before.

Machine Learning

The ML component is what enables Dynamic Reviewer to learn from the scan it is performing and to incorporate that knowledge, on the fly, for the duration of the audit.

It uses various techniques to compensate for the widely heterogeneous environment of web applications. This includes a combination of widely deployed techniques (taint-analysis, fuzzing, differential analysis, timing/delay attacks) along with novel technologies (rDiff analysis, modular meta-analysis) developed specifically for the framework.

This allows the system to make highly informed decisions using a variety of different inputs; a process which diminishes false positives and even uses them to provide human-like insights into the inner workings of web applications.
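To illustrate one of the widely deployed techniques listed above, timing/delay attacks, here is a minimal sketch of how a check might decide whether an injected "sleep" payload actually took effect. The function names, the 0.8 tolerance factor and the idea of re-confirming with a second delay are assumptions made for this example, not Dynamic Reviewer's actual logic.

```python
from typing import Callable, Sequence


def delay_took_effect(baseline: Sequence[float], injected: Sequence[float],
                      delay: float, tolerance: float = 0.8) -> bool:
    """True if every injected response was slower than the slowest baseline
    response by at least `tolerance * delay` seconds.

    baseline -- response times (s) of the unmodified request
    injected -- response times (s) of requests whose payload asks the
                server to sleep for `delay` seconds
    """
    if not baseline or not injected:
        return False
    # Comparing the fastest injected response with the slowest baseline one
    # keeps ordinary network jitter from being mistaken for the sleep.
    return min(injected) - max(baseline) >= tolerance * delay


def confirm_timing_issue(baseline: Sequence[float],
                         measure: Callable[[float], Sequence[float]],
                         delays: Sequence[float] = (2.0, 4.0)) -> bool:
    """Re-test with several delays: a real injection tracks the requested
    delay, whereas a one-off slow response usually does not."""
    return all(delay_took_effect(baseline, measure(d), d) for d in delays)
```

Requiring the slowdown to follow several different requested delays is what turns a single suspicious measurement into a more trustworthy finding, which is the kind of cross-checking that reduces false positives.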

Dynamic Reviewer is aware of which requests are more likely to uncover new elements or attack vectors and adapts itself accordingly.

Also, individual components can force the Core Engine to learn from the HTTP responses they are going to induce, thus improving the chance of uncovering a hidden vector that would only appear as a result of their probing.

Common Features

Cookie-jar/cookie-string support.

Custom header support.

SSL support with fine-grained options.

User Agent spoofing.

Proxy support for SOCKS4, SOCKS4A, SOCKS5, HTTP/1.1 and HTTP/1.0.

Proxy authentication.

Site authentication (SSL-based, form-based, Cookie-Jar, Basic-Digest, NTLMv1, Kerberos and others).

Automatic log-out detection and re-login during the scan (when the initial login was performed via the `autologin`, `login_script` or `proxy` plugins).

Custom 404 page detection.

UI abstraction:

Command-line Interface.

Web User Interface.

Pause/resume functionality.

Hibernation support -- Suspend to and restore from disk.

High performance asynchronous HTTP requests.

With adjustable concurrency.

With the ability to auto-detect server health and adjust its concurrency automatically.

Support for custom default input values, using pairs of patterns (to be matched against input names) and values to be used to fill in matching inputs.
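The custom default input values feature can be pictured as an ordered list of (pattern, value) pairs consulted whenever a form field must be filled in. The sketch below is a hypothetical illustration with made-up patterns and values; it assumes first-match-wins and case-insensitive regular expressions, which may differ from the product's behaviour.

```python
import re

# Hypothetical user-supplied pairs: a regex matched against the input name,
# and the value used to fill any input whose name matches.
DEFAULT_INPUTS = [
    (r"e-?mail", "tester@example.com"),
    (r"(user|login)(name)?", "dr_tester"),
    (r"pass(word)?", "S3cure!Pass"),
    (r"zip|postcode", "00100"),
]


def fill_value(input_name: str, fallback: str = "1") -> str:
    """Return the value of the first pattern that matches `input_name`."""
    for pattern, value in DEFAULT_INPUTS:
        if re.search(pattern, input_name, re.IGNORECASE):
            return value
    return fallback


def fill_form(input_names: list[str]) -> dict[str, str]:
    """Fill every input of a form, falling back to a generic value."""
    return {name: fill_value(name) for name in input_names}
```

For instance, `fill_form(["Email", "username", "new_password", "comment"])` fills the first three from the matching pairs and gives `comment` the fallback `"1"`. Sensible values matter because many forms reject malformed input before the interesting code path is ever reached.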

Web Version Features

Client-Side scanning

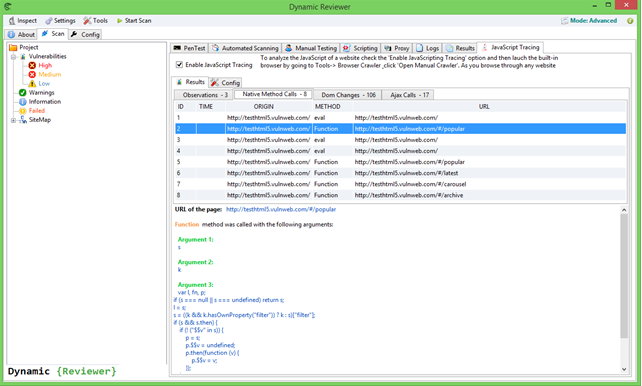

Dynamic Reviewer includes an integrated, real browser environment in order to provide sufficient coverage to modern web applications which make use of technologies such as HTML5, JavaScript, DOM manipulation, AJAX, etc.

In addition to monitoring the vanilla DOM and JavaScript environments, Dynamic Reviewer's browsers also hook into popular frameworks, such as jQuery and Angular, to make the logged data easier to digest.

In essence, this turns Dynamic Reviewer into a DOM and JavaScript debugger, allowing it to monitor DOM events and JavaScript data and execution flows. As a result, not only can the system trigger and identify DOM-based issues, but it will accompany them with a great deal of information regarding the state of the page at the time.

Relevant information includes:

Page DOM, as HTML code.

With a list of DOM transitions required to restore the state of the page to the one at the time it was logged.

Original DOM (i.e. prior to the action that caused the page to be logged), as HTML code.

With a list of DOM transitions.

Data-flow sinks -- Each sink is a JS method which received a tainted argument.

Parent object of the method (e.g. `DOMWindow`).

Method signature (e.g. `decodeURIComponent()`).

Arguments list.

With the identified taint located recursively in the included objects.

Method source code.

JS stacktrace.

Execution flow sinks -- Each sink is a successfully executed JS payload, as injected by the security checks.

Includes a JS stacktrace.

JavaScript stack-traces include:

Method names.

Method locations.

Method source codes.

Argument lists.

Compatible with ES5 and ES6

Integrated with Wappalyzer

A number of frameworks are supported, such as Cordova/PhoneGap and Node.js

In essence, you have access to roughly the same information that your favorite debugger (for example, FireBug) would provide, as if you had set a breakpoint to take place at the right time for identifying an issue.
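The data-flow sink entries above note that the identified taint is located recursively inside the arguments. The sketch below shows one plausible way to do that: walk nested dicts and lists (standing in for JS objects and arrays) and return the access path of every string containing a taint marker. The marker value and the path format are assumptions for illustration only.

```python
TAINT = "dr_taint_1337"  # hypothetical marker injected by a security check


def find_taint(value, path="arguments"):
    """Yield the access path of every string inside `value` that contains TAINT."""
    if isinstance(value, str):
        if TAINT in value:
            yield path
    elif isinstance(value, dict):
        for key, item in value.items():
            yield from find_taint(item, f"{path}.{key}")
    elif isinstance(value, (list, tuple)):
        for index, item in enumerate(value):
            yield from find_taint(item, f"{path}[{index}]")
    # Numbers, booleans and None cannot carry a string taint, so they are skipped.
```

For example, with `args = ["safe", {"html": "<b>" + TAINT + "</b>", "opts": [1, TAINT]}]`, `list(find_taint(args))` gives `["arguments[1].html", "arguments[1].opts[1]"]`, pointing the analyst straight at the tainted members rather than at the whole argument list.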

DOM Security Issues

The DOM security issues detected by Dynamic Reviewer are:

| # | Issue | Type | Category |
|---|---|---|---|
| 1 | Code Injection - Client Side | Error | Code Execution |
| 2 | Code Injection - PHP input wrapper | Error | Code Execution |
| 3 | Code Injection - Timing | Error | Code Execution |
| 4 | File Inclusion - Client Side | Error | Code Execution |
| 5 | OS Command Injection - Client Side | Error | Code Execution |
| 6 | OS Command Injection - Timing | Error | Code Execution |
| 7 | Remote File Inclusion - Client Side | Error | Code Execution |
| 8 | Session Fixation | Error | Code Execution |
| 9 | XSS - DOM | Error | Code Execution |
| 10 | XSS - DOM - Script Context | Error | Code Execution |
| 11 | XSS - Event | Error | Code Execution |
| 12 | Data from attacker controllable navigation based DOM properties is executed as HTML | Error | Code Execution |
| 13 | Data from attacker controllable navigation based DOM properties is executed as JavaScript | Error | Code Execution |
| 14 | Data from attacker controllable URL based DOM properties is executed as HTML | Error | Code Execution |
| 15 | Data from attacker controllable URL based DOM properties is executed as JavaScript | Error | Code Execution |
| 16 | Non-HTML format Data from DOM storage is executed as HTML | Warning | Code Execution |
| 17 | Non-JavaScript format Data from DOM storage is executed as JavaScript | Warning | Code Execution |
| 18 | HTML format Data from DOM storage is executed as HTML | Info | Code Execution |
| 19 | JavaScript format Data from DOM storage is executed as JavaScript | Info | Code Execution |
| 20 | Data from user input is executed as HTML | Warning | Code Execution |
| 21 | Data from user input is executed as JavaScript | Warning | Code Execution |
| 22 | Non-HTML format Data taken from external site(s) (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Error | Code Execution |
| 23 | Non-JavaScript format Data taken from external site(s) (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Error | Code Execution |
| 24 | HTML format Data taken from external site(s) (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Warning | Code Execution |
| 25 | JavaScript format Data taken from external site(s) (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Warning | Code Execution |
| 26 | Non-HTML format Data taken from across sub-domain (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Warning | Code Execution |
| 27 | Non-JavaScript format Data taken from across sub-domain (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Warning | Code Execution |
| 28 | HTML format Data taken from across sub-domain (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Info | Code Execution |
| 29 | JavaScript format Data taken from across sub-domain (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Info | Code Execution |
| 30 | Non-HTML format Data taken from same domain (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Warning | Code Execution |
| 31 | Non-JavaScript format Data taken from same domain (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Warning | Code Execution |
| 32 | HTML format Data taken from same domain (via Ajax, WebSocket or Cross-Window Messages) is executed as HTML | Info | Code Execution |
| 33 | JavaScript format Data taken from same domain (via Ajax, WebSocket or Cross-Window Messages) is executed as JavaScript | Info | Code Execution |
| 34 | Weak Hashing algorithms are used | Error | Cryptography |
| 35 | Weak Encryption algorithms are used | Error | Cryptography |
| 36 | Weak Decryption algorithms are used | Error | Cryptography |
| 37 | Cryptographic Hashing Operations were made | Info | Cryptography |
| 38 | Encryption operations were made | Info | Cryptography |
| 39 | Decryption operations were made | Info | Cryptography |
| 40 | Potentially Sensitive Data is leaked (via HTTP, Ajax, WebSocket or Cross-Window Messages) | Error | Data Leakage |
| 41 | Potentially Sensitive Data is leaked through Referrer Headers | Error | Data Leakage |
| 42 | Data is leaked through HTTP | Warning | Data Leakage |
| 43 | Data is leaked through WebSocket | Warning | Data Leakage |
| 44 | Data is leaked through Cross-Window Messages | Warning | Data Leakage |
| 45 | Data is leaked through Referrer Headers | Warning | Data Leakage |
| 46 | Potentially Sensitive Data is stored on Client-side Storage (in LocalStorage, SessionStorage, Cookies or IndexedDB) | Warning | Data Storage |
| 47 | Data is stored on Client-side Storage (in LocalStorage, SessionStorage, Cookies or IndexedDB) | Info | Data Storage |
| 48 | Cross-window Messages are sent insecurely | Error | Communication |
| 49 | Cross-site communications are made | Warning | Communication |
| 50 | Communications across sub-domains are made | Warning | Communication |
| 51 | Same Origin communications are made | Info | Communication |
| 52 | JavaScript code is loaded from Cross-site Sources | Warning | JS Code |
| 53 | JavaScript code is loaded from across sub-domains | Info | JS Code |
| 54 | JavaScript code is loaded from Same Origin | Info | JS Code |

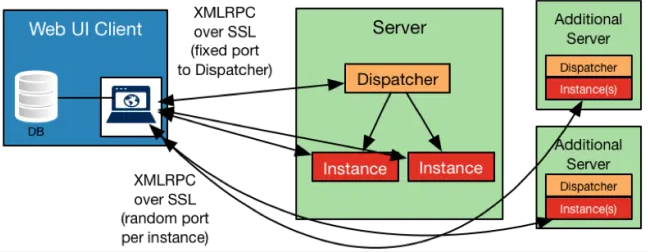

Browser Cluster

The browser cluster coordinates the browser analysis of resources (automated Web UI Clients) and allows the system to perform, in a high-performance fashion, operations which would normally be quite time-consuming.

It provides the single point of contact that sends initial scan requests to its grid of Dispatch Servers (Dispatchers). A Dispatcher then spawns a new Instance on its server. At this point, the Web UI Clients can communicate directly with the spawned Instance to configure it for the appropriate scanning jobs. When an Instance has finished its task, the Web UI Clients pull the data and store it, while the Instance simply goes away, returning the resources it consumed to the operating system for future Instances or other software tasks altogether. As we move into production testing, we can scale the deployment to span additional servers in our grid; this not only allows us to run multiple scans, but also accommodates teams of users while consolidating the gathered data in a central database on the Web UI Client.

Configuration options include:

Adjustable pool-size, i.e. the number of browser workers to utilize.

Timeout for each job.

Worker TTL counted in jobs -- Workers which exceed the TTL have their browser process re-spawned.

Ability to disable loading images.

Adjustable screen width and height.

Can be used to analyze responsive and mobile applications.

Ability to wait until certain elements appear in the page.

Configurable local storage data.
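The options above map naturally onto a small configuration object. The following hypothetical sketch (the field names are ours, not the product's) also models the worker TTL rule: each worker counts the jobs it has run and re-spawns its browser process once that count reaches the TTL.

```python
from dataclasses import dataclass, field


@dataclass
class BrowserClusterConfig:
    pool_size: int = 4              # number of browser workers
    job_timeout: float = 60.0       # seconds allowed for each job
    worker_ttl: int = 50            # jobs a worker runs before its browser is re-spawned
    ignore_images: bool = True      # skip loading images
    screen_width: int = 1600        # small values emulate mobile/responsive layouts
    screen_height: int = 1200
    # URL pattern -> CSS selector that must appear before the page is analysed.
    wait_for_elements: dict[str, str] = field(default_factory=dict)
    local_storage: dict[str, str] = field(default_factory=dict)


class Worker:
    """Hypothetical browser worker that only tracks its job count."""

    def __init__(self, config: BrowserClusterConfig):
        self.config = config
        self.jobs_done = 0
        self.respawns = 0

    def run_job(self, job) -> None:
        # A real worker would drive the browser with `job` here; we only count.
        self.jobs_done += 1
        if self.jobs_done >= self.config.worker_ttl:
            self.respawn_browser()

    def respawn_browser(self) -> None:
        # Restarting the browser frees memory that long-lived sessions tend to leak.
        self.respawns += 1
        self.jobs_done = 0
```

A job-count TTL is a simple, predictable way to bound per-browser resource growth without having to monitor each process's memory directly.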

Coverage

The system can provide great coverage to modern web applications due to its integrated browser environment. This allows it to interact with complex applications that make heavy use of client-side code (like JavaScript) just like a human would.

In addition, it knows which browser state changes the application has been programmed to handle and is able to trigger them programmatically in order to provide coverage for the full set of possible scenarios.

By inspecting all possible pages and their states (when using client-side code) Dynamic Reviewer is able to extract and audit the following elements and their inputs:

Forms

Along with ones that require interaction via a real browser due to DOM events.

User-interface Forms

Input and button groups which don't belong to an HTML `<form>` element but are instead associated via JS code.

User-interface Inputs

Orphan `<input>` elements with associated DOM events.

Links

Along with ones that have client-side parameters in their fragment, e.g.:

`http://example.com/#/?param=val&param2=val2`

With support for rewrite rules.

Link Templates -- Allowing for extraction of arbitrary inputs from generic paths, based on user-supplied templates -- useful when rewrite rules are not available.

Along with ones that have client-side parameters in their URL fragments, e.g.:

`http://example.com/#/param/val/param2/val2`

Cookies

Headers

Generic client-side elements which have associated DOM events.

AJAX-request parameters.

JSON request data.

XML request data.
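The two fragment-based link forms listed above can be parsed with Python's standard library. This sketch assumes fragment parameters follow the `#/?name=value&...` shape of the first example, and models a user-supplied link template as a regular expression with named groups; that representation is our assumption about how such templates could be expressed.

```python
import re
from urllib.parse import parse_qsl, urlsplit


def fragment_params(url: str) -> dict[str, str]:
    """Extract query-style parameters from a fragment such as '#/?a=1&b=2'."""
    fragment = urlsplit(url).fragment      # e.g. '/?param=val&param2=val2'
    _, _, query = fragment.partition("?")
    return dict(parse_qsl(query))


# Hypothetical link template: each named group becomes an auditable input.
TEMPLATE = re.compile(r"#/param/(?P<param>[^/]+)/param2/(?P<param2>[^/]+)")


def template_params(url: str) -> dict[str, str]:
    """Return the inputs captured by the template, or {} if it does not match."""
    match = TEMPLATE.search(url)
    return match.groupdict() if match else {}
```

Both functions return the same `{"param": "val", "param2": "val2"}` for the two example URLs above, which is the point: once extracted, path-style and query-style inputs can be audited the same way.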

Distributed architecture

Dynamic Reviewer is designed to fit into your workflow and easily integrate with your existing infrastructure.

Depending on the level of control you require over the process, you can choose either the REST service or the custom RPC protocol.

Both approaches allow you to:

Remotely monitor and manage scans.

Perform multiple scans at the same time -- Each scan is compartmentalized to its own OS process to take advantage of:

Multi-core/SMP architectures.

OS-level scheduling/restrictions.

Sandboxed failure propagation.

Communicate over a secure channel.

REST API

Very simple and straightforward API.

Easy interoperability with non-Ruby systems.

Operates over HTTP.

Uses JSON to format messages.

Stateful scan monitoring.

Unique sessions automatically receive only updates, rather than the full data, when polling for progress.
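Receiving only updates is essentially delta polling. The sketch below is a hypothetical server-side model, not the actual REST API: each polling session remembers how many issues it has already been sent, and a poll returns a JSON document containing only the new ones.

```python
import json


class ScanProgress:
    """Hypothetical per-scan state shared by all polling sessions."""

    def __init__(self) -> None:
        self.issues: list[dict] = []
        self.seen: dict[str, int] = {}   # session id -> number of issues already sent

    def add_issue(self, issue: dict) -> None:
        self.issues.append(issue)

    def poll(self, session_id: str, status: str = "scanning") -> str:
        # A new session starts at 0, so its first poll returns everything.
        already_sent = self.seen.get(session_id, 0)
        new_issues = self.issues[already_sent:]
        self.seen[session_id] = len(self.issues)
        return json.dumps({"status": status, "issues": new_issues})
```

Because issues are only ever appended, a single counter per session is enough to compute the delta, keeping each poll small no matter how long the scan runs.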

RPC API

High-performance/low-bandwidth communication protocol.

MessagePack serialization for performance, efficiency and ease of integration with 3rd party systems.

Grid:

Self-healing.

Scale up/down by hot-plugging/hot-unplugging nodes.

Can scale up infinitely by adding nodes to increase scan capacity.

(Always-on) Load-balancing -- All Instances are automatically provided by the least burdened Grid member.

With optional per-scan opt-out/override.

(Optional) High-Performance mode -- Combines the resources of multiple nodes to perform multi-Instance scans.

Enabled on a per-scan basis.
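Always-on load balancing amounts to choosing the least burdened Grid member for each new Instance, unless the scan overrides that choice. A hypothetical sketch, assuming "burden" is measured as running Instances relative to each node's capacity (the real metric may differ):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    url: str
    running: int    # Instances currently running on this Dispatcher
    capacity: int   # Instances the node is willing to host

    @property
    def burden(self) -> float:
        return self.running / self.capacity


def pick_node(grid: list[Node], override: Optional[str] = None) -> Node:
    """Return the least burdened node with spare capacity, unless the scan
    overrides load balancing by naming a specific node."""
    if override is not None:
        for node in grid:
            if node.url == override:
                return node
        raise ValueError(f"unknown node: {override}")
    available = [node for node in grid if node.running < node.capacity]
    if not available:
        raise RuntimeError("every Grid member is at capacity")
    return min(available, key=lambda node: node.burden)
```

Normalising by capacity lets larger and smaller machines coexist in the same Grid: a node running 3 of 12 possible Instances is preferred over one running 2 of 4.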

Scope configuration

Filters for redundant pages like galleries, catalogs, etc. based on regular expressions and counters.

Can optionally detect and ignore redundant pages automatically.

URL exclusion filters using regular expressions.

Page exclusion filters based on content, using regular expressions.

URL inclusion filters using regular expressions.

Can be forced to only follow HTTPS paths and not downgrade to HTTP.

Can optionally follow subdomains.

Adjustable page count limit.

Adjustable redirect limit.

Adjustable directory depth limit.

Adjustable DOM depth limit.

Adjustment using URL-rewrite rules.

Can read paths from multiple user supplied files (to both restrict and extend the scope).
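Several of the options above combine into a single "should this URL be followed?" decision. The sketch below is a hypothetical illustration: inclusion and exclusion filters are regular expressions, and each redundancy rule pairs a regex with a counter that lets only the first N matching pages through (useful for galleries and catalogs). Directory depth is approximated as the number of path segments; all patterns and limits are made up.

```python
import re
from collections import Counter
from urllib.parse import urlsplit


class Scope:
    def __init__(self, include=(), exclude=(), redundant=None,
                 https_only=False, depth_limit=10):
        self.include = [re.compile(p) for p in include]
        self.exclude = [re.compile(p) for p in exclude]
        # regex -> maximum number of matching pages to follow
        self.redundant = {re.compile(p): n for p, n in (redundant or {}).items()}
        self.https_only = https_only
        self.depth_limit = depth_limit
        self.hits = Counter()

    def allows(self, url: str) -> bool:
        parts = urlsplit(url)
        if self.https_only and parts.scheme != "https":
            return False                        # never downgrade to HTTP
        if len([s for s in parts.path.split("/") if s]) > self.depth_limit:
            return False
        if any(p.search(url) for p in self.exclude):
            return False
        if self.include and not any(p.search(url) for p in self.include):
            return False
        for pattern, limit in self.redundant.items():
            if pattern.search(url):
                self.hits[pattern.pattern] += 1
                if self.hits[pattern.pattern] > limit:
                    return False                # enough samples of this page type
        return True
```

With `Scope(include=[r"example\.com"], exclude=[r"/logout"], redundant={r"/gallery/\d+": 2}, https_only=True)`, the first two gallery pages are followed and the third is skipped, while logout, plain-HTTP and off-site URLs are rejected outright.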

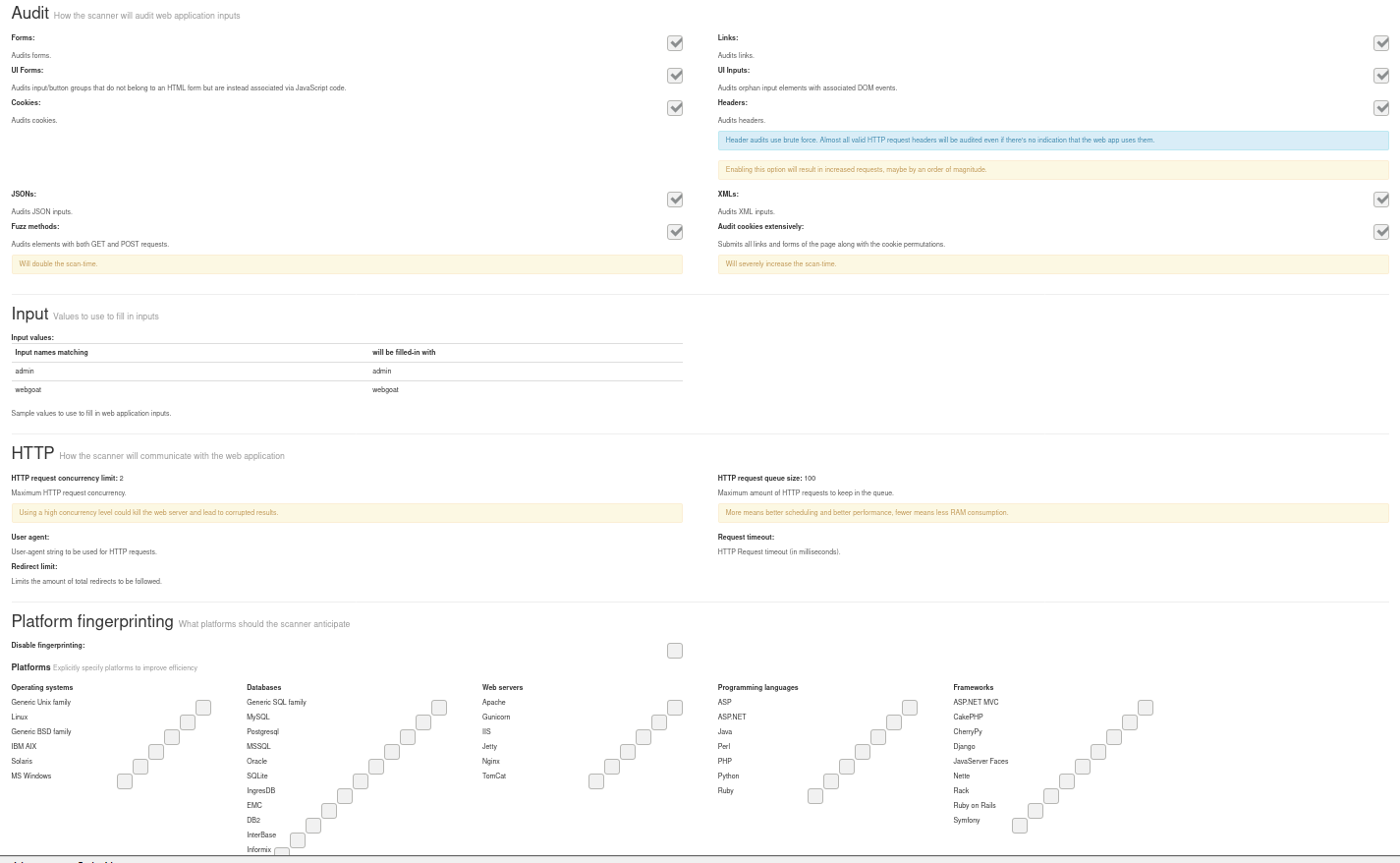

Audit

Can audit:

Forms

Can automatically refresh nonce tokens.

Can submit them via the integrated browser environment.

User-interface Forms

Input and button groups which don't belong to an HTML `<form>` element but are instead associated via JS code.

User-interface Inputs

Orphan `<input>` elements with associated DOM events.

Links

Can load them via the integrated browser environment.

Link Templates

Can load them via the integrated browser environment.

Cookies

Can load them via the integrated browser environment.

Headers

Generic client-side DOM elements.

JSON request data.

XML request data.

Can ignore binary/non-text pages.

Can audit elements using both `GET` and `POST` HTTP methods.

Can inject both raw and HTTP-encoded payloads.

Can submit all links and forms of the page along with the cookie permutations to provide extensive cookie-audit coverage.

Can exclude specific input vectors by name.

Can include specific input vectors by name.
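Taken together, the input-vector options above describe how audit mutations can be generated. In this hypothetical sketch (names are ours), every input that passes the include/exclude-by-name filters receives the payload once per HTTP method, both raw and URL-encoded; URL encoding is our reading of "HTTP encoded".

```python
from itertools import product
from typing import Iterable, Iterator, Optional
from urllib.parse import quote


def mutations(inputs: dict[str, str], payload: str,
              include: Optional[Iterable[str]] = None,
              exclude: Iterable[str] = ()) -> Iterator[tuple[str, str, dict[str, str]]]:
    """Yield (method, input_name, mutated_inputs) for every audit variant."""
    include = set(include) if include is not None else None
    exclude = set(exclude)
    # Raw and URL-encoded forms; dict.fromkeys drops the duplicate when they match.
    variants = list(dict.fromkeys((payload, quote(payload, safe=""))))
    for name in inputs:
        if name in exclude or (include is not None and name not in include):
            continue
        for method, value in product(("GET", "POST"), variants):
            mutated = dict(inputs)   # copy, so the other inputs keep their defaults
            mutated[name] = value
            yield method, name, mutated
```

Excluding an input such as an anti-CSRF token keeps it intact in every mutation, so requests are not rejected for reasons unrelated to the payload being tested.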

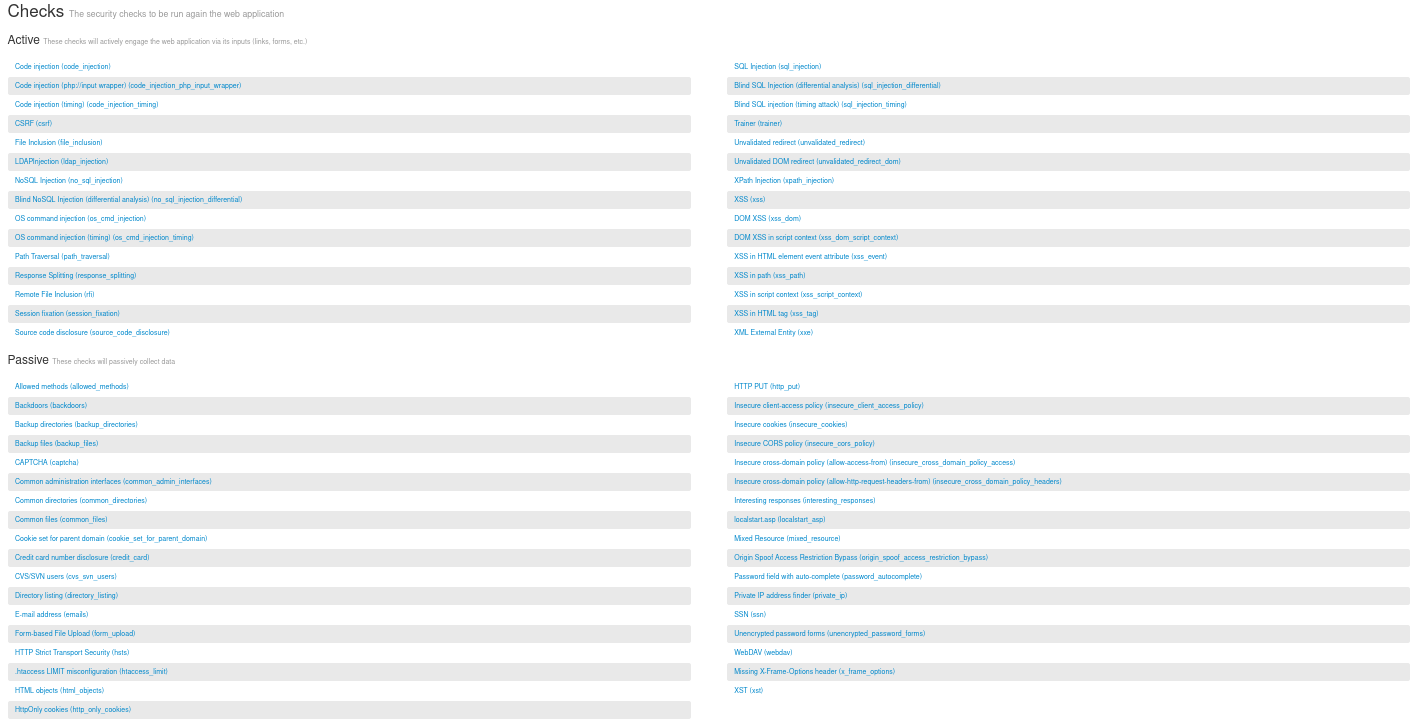

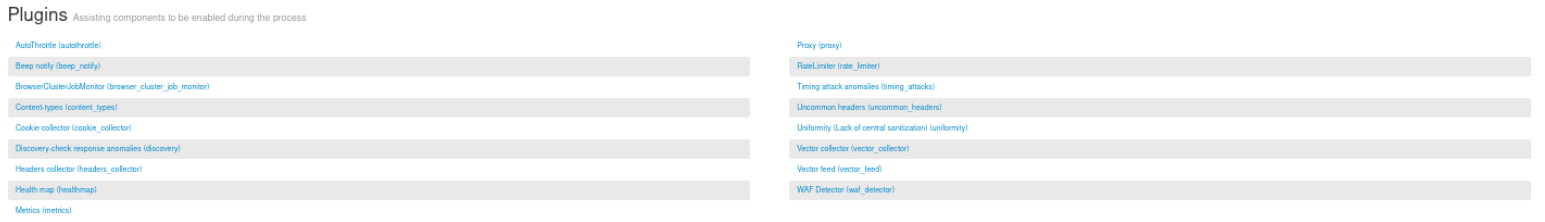

Components

Dynamic Reviewer is a highly modular system, employing several components of distinct types to perform its duties.

In addition to enabling or disabling the bundled components so as to adjust the system's behavior and features as needed, functionality can be extended via the addition of user-created components to suit almost every need.

Platforms

In order to make efficient use of the available bandwidth, Dynamic Reviewer performs platform fingerprinting, integrating with Wappalyzer, and tailors the audit process to the deployed server-side technologies by only using applicable payloads.

The user also has the option of specifying extra platforms (like a DB server) in order to help the system be as efficient as possible. Alternatively, platform fingerprinting can be disabled altogether.

Finally, Dynamic Reviewer will always err on the side of caution and send all available payloads when it fails to identify specific platforms.
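The platform logic just described (use only applicable payloads, let the user add platforms, allow fingerprinting to be switched off, fall back to every payload when nothing is identified) can be sketched as follows. The payload table and platform names are illustrative placeholders, not the product's check database.

```python
from typing import Iterable

# Hypothetical per-platform payloads for a single code-injection check.
PAYLOADS = {
    "java": ["${7*7}"],
    "php": ["echo 'dr_' . 'probe';"],
    "python": ["__import__('os').getpid()"],
}


def select_payloads(fingerprinted: Iterable[str],
                    user_platforms: Iterable[str] = (),
                    fingerprinting: bool = True) -> list[str]:
    """Pick payloads for the identified platforms, or all of them if none match."""
    platforms = set(fingerprinted) if fingerprinting else set()
    platforms |= set(user_platforms)          # user-declared extras
    known = platforms.intersection(PAYLOADS)
    if not known:
        # Err on the side of caution: unidentified stack, send every payload.
        known = set(PAYLOADS)
    return [payload for platform in sorted(known) for payload in PAYLOADS[platform]]
```

Fingerprinting as PHP sends one payload instead of three; on a large target that saving is multiplied by every input vector and every check.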

Unique Desktop Features

Our Desktop solution has the following features:

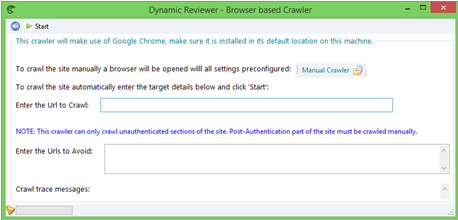

Built-in Crawler, Scan Manager and Proxy

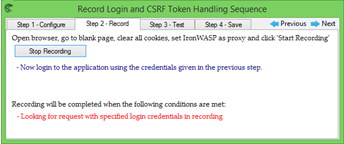

Login Sequence Recording

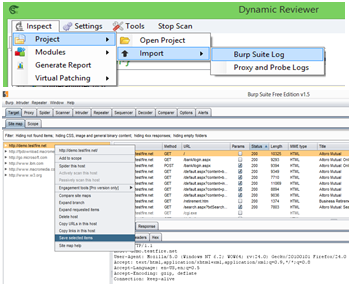

C#, VB.NET, Python and Ruby based plugins, compatible with Burp Suite, Acunetix and Trustwave (formerly Cenzic) scripts and with the IronWASP API. Comes bundled with a growing number of modules built by researchers in the security community

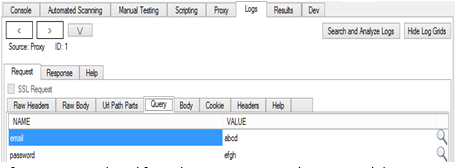

Burp Suite log import. It can analyse the HTTP logs for access control and other checks

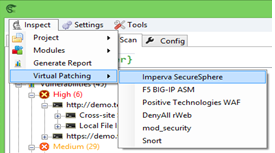

Virtual patching for WAFs, such as Imperva, F5, Positive Technologies, Snort, ModSecurity and DenyAll rWeb

Active plugins for scanning, which perform scans against the target to identify vulnerabilities, with fine-grained scanning support. They are executed only when the user explicitly calls them (e.g. Cross-Site Scripting, SQL Injection, Code Injection, Parameter Manipulation)

Passive plugins for vulnerability detection, through analysis of all traffic going through the tool (e.g. passwords sent in clear text and insecure cookies)

Format plugins for defining the various data formats found in request bodies, such as JSON, XML, Java serialized objects, AMF, WCF, GWT, multi-part POST or custom data formats

Session plugins to customise the scans. A unique feature that can understand slight variations in authentication, session handling, CSRF protections and Log-flows/multi-step forms

JavaScript Static Analysis Engine. It performs Taint Analysis for DOM-based XSS. Identifies Source and Sink objects and can trace them through the code

Exploit testing (integrated with Security Reviewer)

Dynamic Reviewer DAST automatically imports, from Security Reviewer's static analysis, the analyzed web application's directory and file lists, attack vectors and exploits.

HTML5 discovery. It performs JavaScript based port scans, stealth AppCache attacks and HTML5 botnets

Browser Phishing Framework. It performs multiple phishing attacks against the browser, including database stealing and backdoor placement

XSS Reverse Shell. Session hijacking by tunnelling HTTP over HTTP using HTML5 Cross Origin Requests

SSL-TLS Security checker. Scanner to discover vulnerabilities in SSL and TLS installations

SAP Testing. It can detect hostnames, the SAPSID and the System Number from SAP error messages. It can find all public and private ICF services (such as Info Service and SOAP RFC) and Web Services (such as SAP Start Service) that respond to a request with 200 OK. If login uses Basic Authentication, it launches a brute-force attack and verb tampering. It automatically checks all HTTP(S) ports and finds interesting pages, including admin pages, and, using WAM, it tries to log in to the system as different users via the REMOTE_USER header

SCADA Testing. It provides Vulnerability detection capabilities for multiple SCADA Master Systems

WiFi Testing. WiFi Routers Vulnerability Scanner

Team Reviewer, ThreadFix and OWASP Defect Dojo integration modules. Vulnerabilities found will be correlated to third party scanners

GHDB-ExploitDB. Google Hacking DB integrated in Exploit-DB is used to check if the web application has a publicly available exploit

Deep Discovery. Automatic discovery and exploitation of the main DBMS, AS, CMS, DMS, Wiki and e-Commerce platforms, such as: WordPress, Drupal, ccPortal, WebGui, Rubedo, EzPublish, MODX, Concrete, X3, PHPNuke, DotNetNuke, Orchard, Joomla, Umbraco, Seed, Magento, OpenCart, WebSphere Commerce, WebSphere Portal, MediaWiki, Movable Type, phpLiteAdmin, TWiki, SharePoint, Documentum, FileNet, OWS, BI Publisher, Splunk, LiteSpeed, PeopleSoft, Siebel, Alfresco, Nuxeo, iPlanet, GlassFish, phpBB, Magnolia, ZenCart, Tableau, Hesk, IBM Tivoli EP and WebSphere AS. It fingerprints the web server type and version, reports old versions of server software that contain known vulnerabilities, discovers web server configuration problems (directory listing, backup files, old configuration files, etc.), identifies specific web applications running on the web server and checks for known application vulnerabilities
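To give a flavour of the passive plugins listed above (for example, the insecure-cookie check), here is a hypothetical sketch of a check that inspects `Set-Cookie` headers already seen in proxied traffic and flags cookies missing the `Secure` or `HttpOnly` attributes. It is written in Python purely for illustration and does not use the IronWASP plugin API.

```python
def cookie_findings(set_cookie_headers: list[str]) -> list[str]:
    """Return human-readable findings for cookies lacking protective attributes."""
    findings = []
    for header in set_cookie_headers:
        name_value, *attributes = [part.strip() for part in header.split(";")]
        name = name_value.split("=", 1)[0]
        # Attribute names are case-insensitive; drop any '=value' part.
        flags = {attr.split("=", 1)[0].lower() for attr in attributes}
        if "secure" not in flags:
            findings.append(f"{name}: missing Secure attribute")
        if "httponly" not in flags:
            findings.append(f"{name}: missing HttpOnly attribute")
    return findings
```

Because such a check only reads traffic that is already flowing through the tool, it sends no extra requests to the target, which is what distinguishes passive plugins from active ones.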

DISCLAIMER: Because we make use of open-source third-party components, we do not sell the product; instead, we offer yearly subscription-based Commercial Support to selected customers.

COPYRIGHT (C) 2014-2021 SECURITY REVIEWER SRL. ALL RIGHTS RESERVED.