Team Reviewer provides effective vulnerability discovery, management and tracking by continuously identifying threats, monitoring changes in your network, discovering and mapping all your devices and software (including new, unauthorized and forgotten ones), and reviewing configuration details for each asset.

Team Reviewer is a security program and vulnerability management tool. It allows you to manage your application security program, maintain product and application information, schedule scans, triage vulnerabilities and push findings into defect trackers. Consolidate your findings into a single source of truth with Team Reviewer.

Central Repository

Team Reviewer can maintain its own repository of internally managed vulnerabilities (findings). The private repository behaves identically to other sources of vulnerability intelligence such as the OSS Index, VulnDB, NVD, etc.

This repository can be stored in a MySQL, MariaDB, PostgreSQL or Oracle (RAC included) DBMS, or in a managed database service (AWS RDS).

There are three primary use cases for the private vulnerability repository.

Organizations that wish to track vulnerabilities in internally-developed components shared among various software projects in the organization.

Organizations performing security research that have a need to document said research before optionally disclosing it.

Organizations that are using unmanaged sources of data to identify vulnerabilities. This includes:

Change logs

Commit logs

Issue trackers

Social media posts

Vulnerabilities tracked in the private vulnerability repository have a source of ‘INTERNAL’. Like all vulnerabilities in the system, each one requires a unique VulnID. Organizations are encouraged to follow naming patterns that help identify the source. For example, vulnerabilities in the NVD all start with ‘CVE-’. Likewise, an organization tracking its own vulnerabilities may opt for a prefix such as ‘ACME-’ or ‘INT-’, or use multiple qualifiers depending on the type of vulnerability. The only requirement is that the VulnID is unique within the INTERNAL source.
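The naming rule above can be checked before new INTERNAL vulnerabilities are entered. The sketch below assumes a hypothetical `ACME-YYYY-NNNN` / `INT-YYYY-NNNN` convention; only the uniqueness check reflects the documented requirement, and `validate_internal_ids` is an illustrative helper, not part of Team Reviewer.

```python
import re

# Hypothetical organization convention: ACME-2022-0001 or INT-2022-0001.
# The only documented hard rule is uniqueness within the INTERNAL source.
INTERNAL_ID_PATTERN = re.compile(r"^(ACME|INT)-\d{4}-\d{4,}$")


def validate_internal_ids(vuln_ids):
    """Return (malformed, duplicates) for a list of proposed INTERNAL VulnIDs."""
    seen = set()
    malformed = []
    duplicates = []
    for vuln_id in vuln_ids:
        if not INTERNAL_ID_PATTERN.match(vuln_id):
            malformed.append(vuln_id)
        if vuln_id in seen:
            duplicates.append(vuln_id)
        seen.add(vuln_id)
    return malformed, duplicates


ids = ["ACME-2022-0001", "INT-2022-0002", "ACME-2022-0001", "CVE-2021-44228"]
print(validate_internal_ids(ids))
# → (['CVE-2021-44228'], ['ACME-2022-0001'])
```

The prefix pattern is a convenience for humans; rejecting a duplicate is what actually protects the repository.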

Web App

Team Reviewer provides a unified interface for accessing all the tools of the Security Reviewer Suite:

Static Reviewer

Dynamic Reviewer

SCA Reviewer (Software Composition Analysis)

Firmware Reviewer

Mobile Reviewer

Team Reviewer is a native Django application served through NGINX and uWSGI, which makes it scalable.

See Team Reviewer’s Integration Checklist.

Enhanced Features

Team Reviewer is a 100% web GUI application based on OWASP DefectDojo, with many enhancements:

Multi-language Kit is available for localization.

Direct execution of all features provided by Security Reviewer Suite (SAST, DAST, SCA, Mobile, Firmware)

Extended Workflow and Reporting features, GDPR Compliance Level included

High-performance database based on a MariaDB 10.x Galera cluster. It can be switched to Oracle RAC 12 or any other supported relational database

Secured source code and operating platform, verified by thorough static code review and dynamic analysis performed with the Security Reviewer and Dynamic Reviewer tools

Encryption of DB Tables containing sensitive data (Users, Groups, Applications, Workflow, Policies, etc.)

Enhanced support for third-party SAST, IAST, DAST and network scanning tools

Mobile Behavioral Analysis integration (Mobile Reviewer)

Software Composition Analysis (SCA) integration

Software Resilience Analysis (SRA) integration

Single Sign-On with Firmware Reviewer

SQALE, OWASP Top Ten 2017, Mobile Top Ten 2016, CWE, CVE, WASC, CVSSv2, CVSSv3.1 and PCI-DSS 3.2.1 Compliance

Application Portfolio Management tools integration

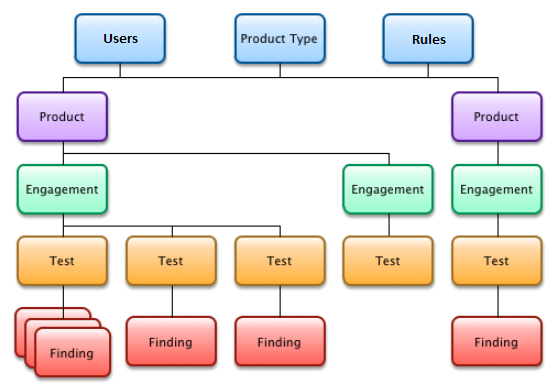

Models

Team Reviewer attempts to simplify how users interact with the system by minimizing the number of objects it defines. The definition of each, along with sample usages, is below.

Products

Any application, project, program, or product that you are currently analyzing.

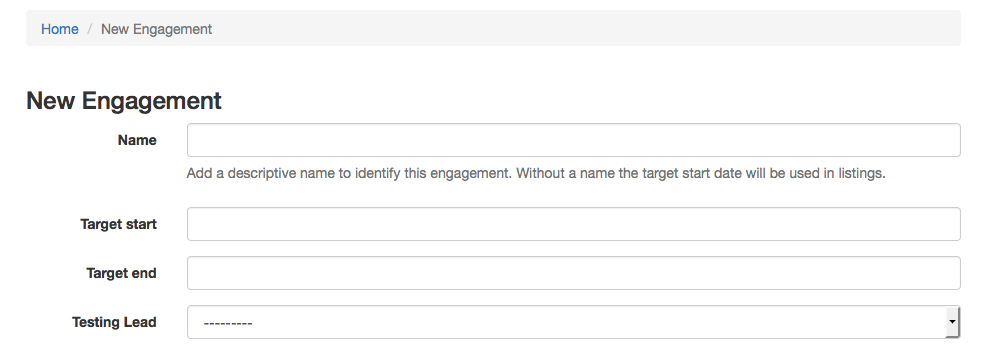

Engagement

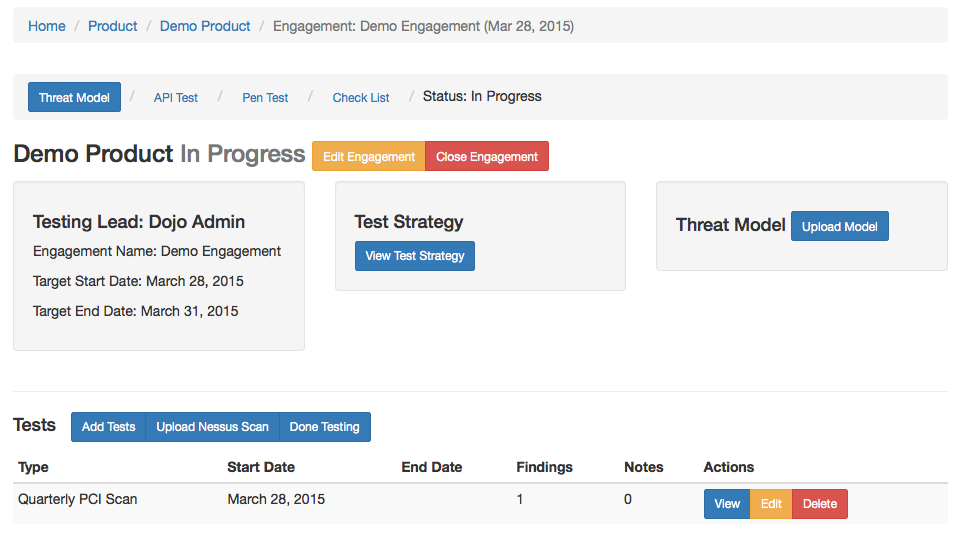

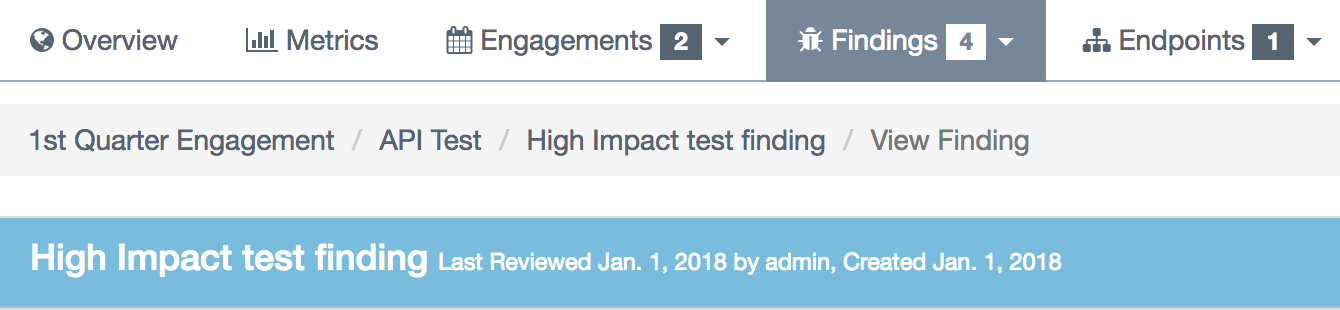

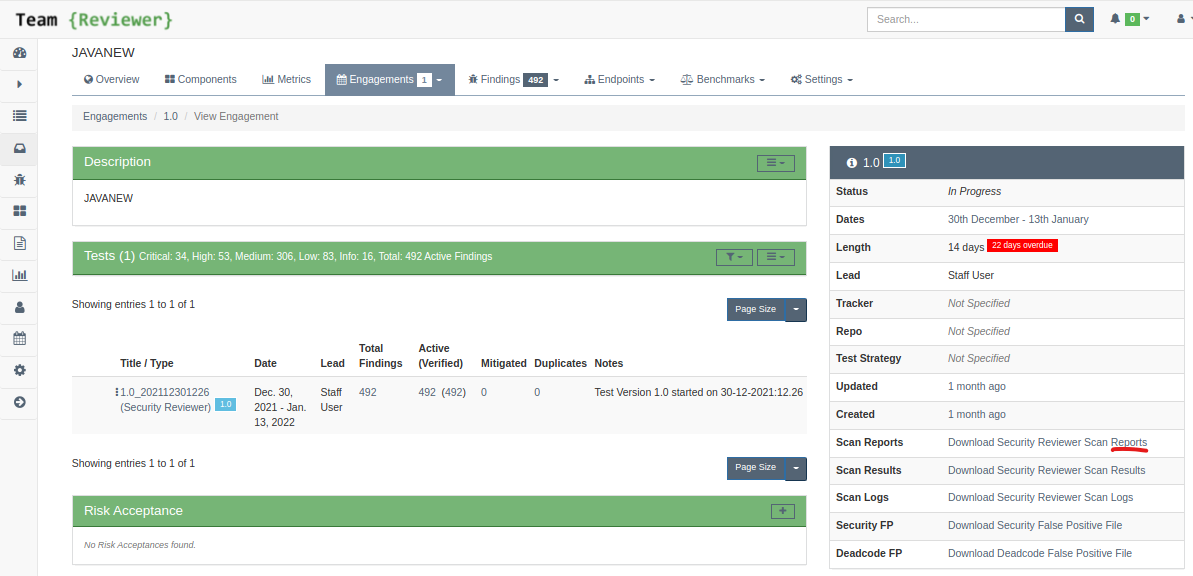

Engagements are moments in time when testing takes place, also known as Audits. Each one is associated with a name for easy reference, a timeline, a lead (the user account of the main person conducting the testing), a test strategy, and a status.

There are two types of Engagement: Interactive and CI/CD.

An interactive engagement is typically an engagement conducted by an engineer, where findings are usually uploaded by the engineer.

A CI/CD engagement, as its name suggests, is for automated integration with a CI/CD pipeline.
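As a sketch of how a pipeline step might open a CI/CD engagement through the REST API, the code below builds (but does not send) a POST request. The host, the token and the `/api/v2/engagements/` field names (`engagement_type`, `build_id`, `target_start`, `target_end`) are assumptions borrowed from upstream DefectDojo; verify them against the API docs at `/api/v2/doc/` on your own installation.

```python
import json
import urllib.request
from datetime import date, timedelta

# Assumption: endpoint and field names follow upstream DefectDojo.
BASE_URL = "https://teamreviewer.example.com"  # hypothetical host


def build_cicd_engagement_request(token, product_id, name, build_id, days=1):
    """Build (but do not send) a request that opens a CI/CD engagement."""
    start = date.today()
    payload = {
        "name": name,
        "product": product_id,
        "engagement_type": "CI/CD",
        "build_id": build_id,
        "status": "In Progress",
        "target_start": start.isoformat(),
        "target_end": (start + timedelta(days=days)).isoformat(),
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/api/v2/engagements/",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_cicd_engagement_request("0123abcd", product_id=7,
                                    name="nightly-build", build_id="1042")
# In a real pipeline step you would then call urllib.request.urlopen(req).
print(req.get_method(), req.full_url)
# → POST https://teamreviewer.example.com/api/v2/engagements/
```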

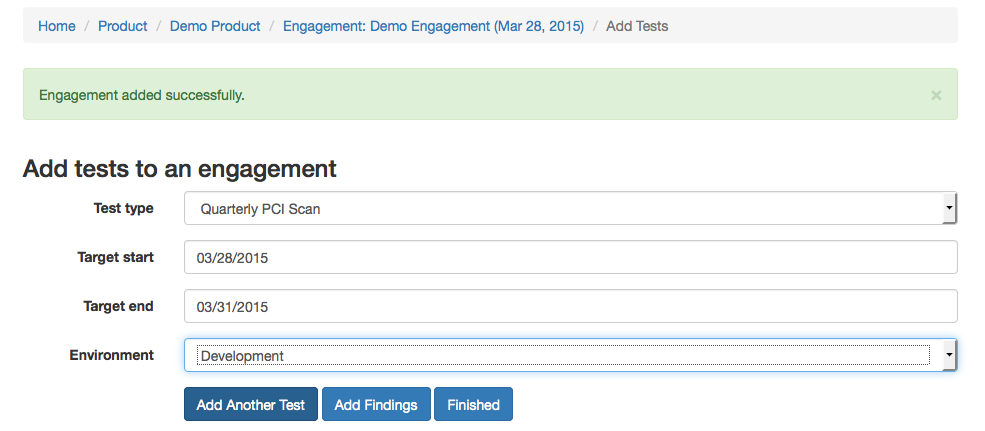

Each Engagement can include several Tests.

You can view the Test Strategy or Threat Model, modify the Engagement dates, view Tests and Findings, add Risk Acceptance, complete the security Check List, or close the Engagement.

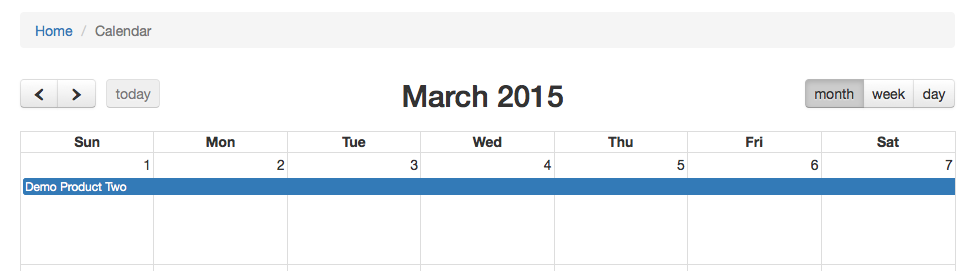

Engagements are linked to a timeline in the Calendar.

Engagement Survey

Engagement Survey extends Engagement records by incorporating survey(s) associated with each engagement to help develop a test strategy.

The default questions within these surveys have been created by the Rackspace Security Engineering team to help identify the attack vectors and risks associated with the product being assessed.

GDPR, Static Analysis and Dynamic Analysis customizable Surveys are also available.

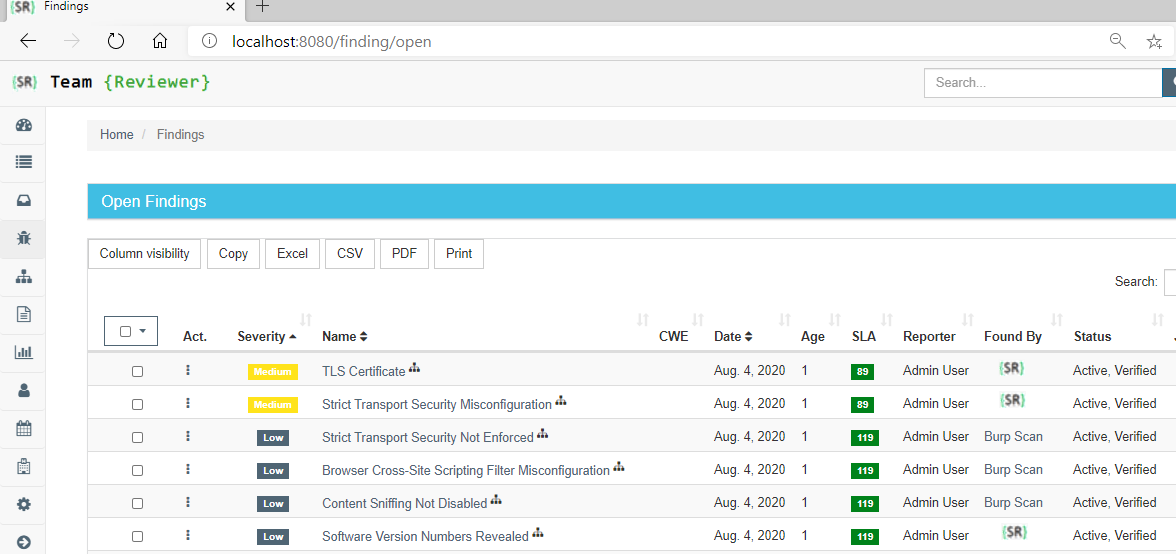

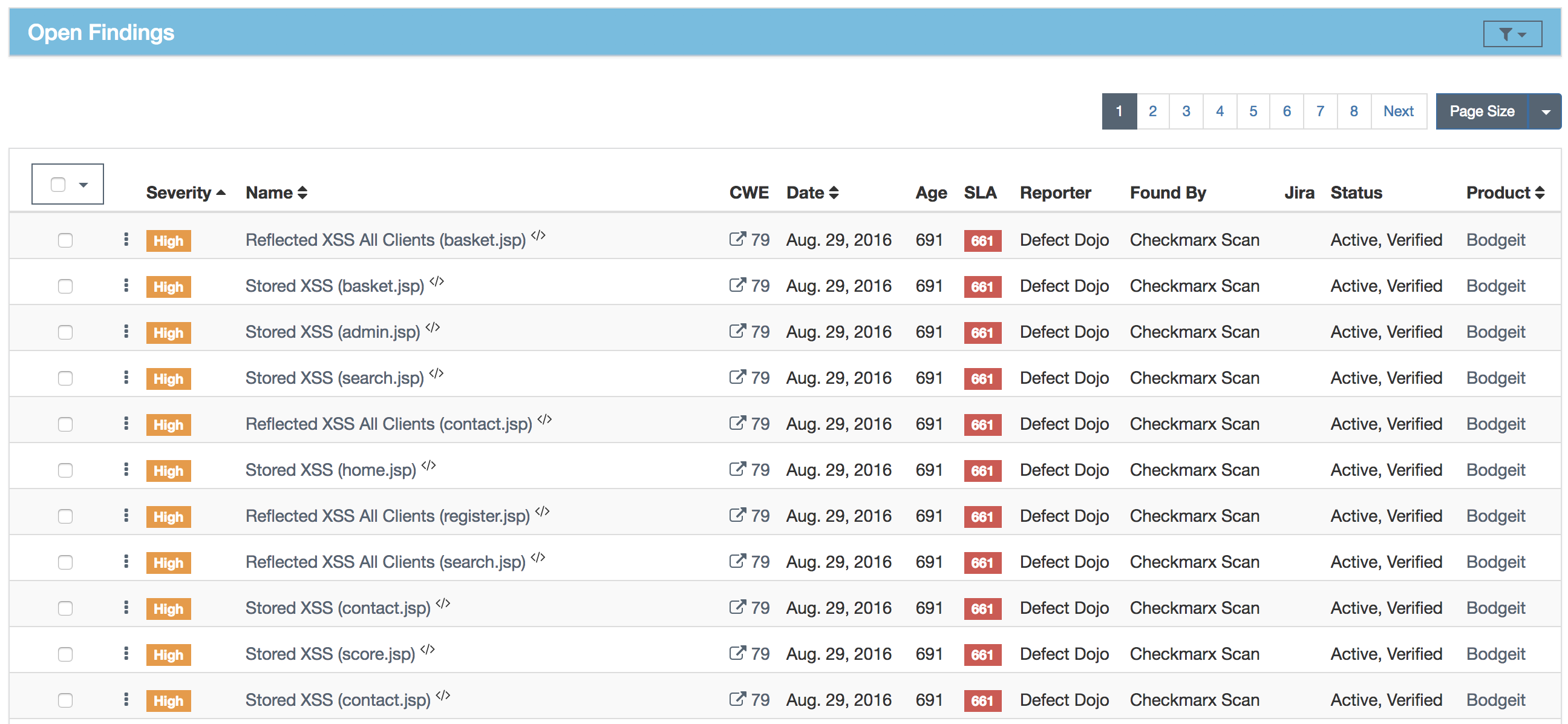

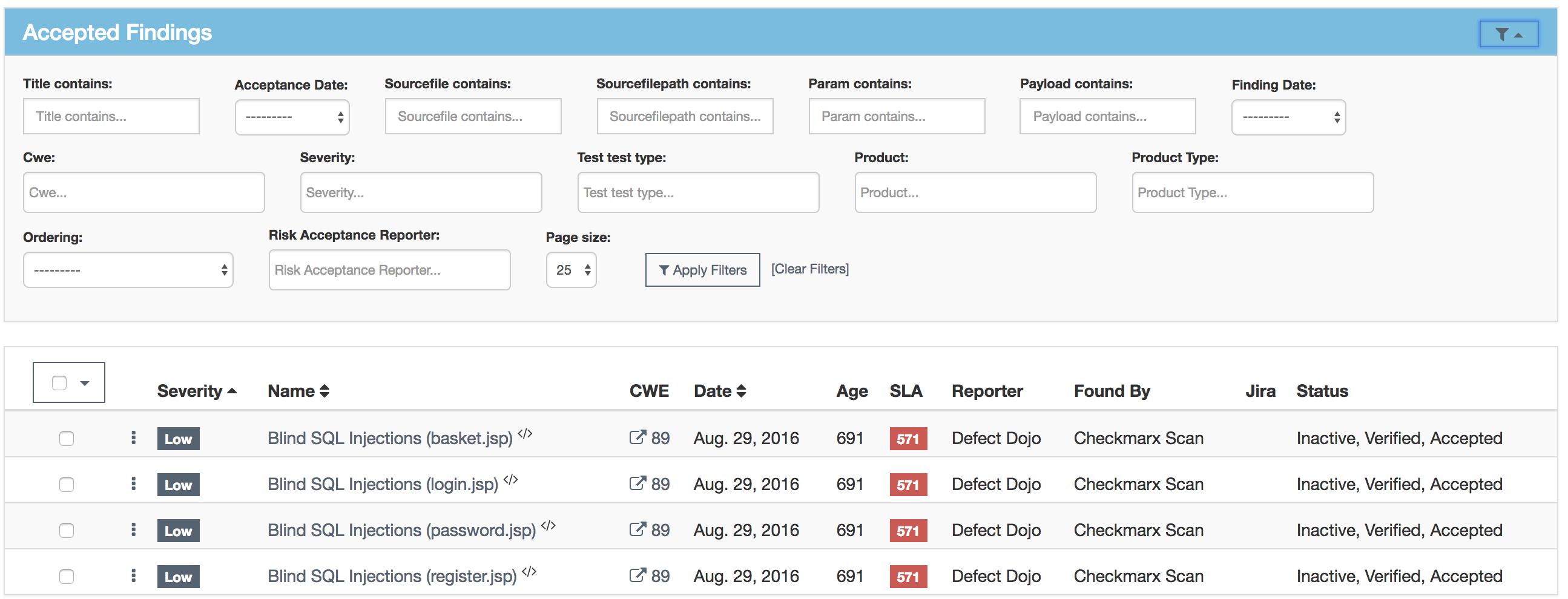

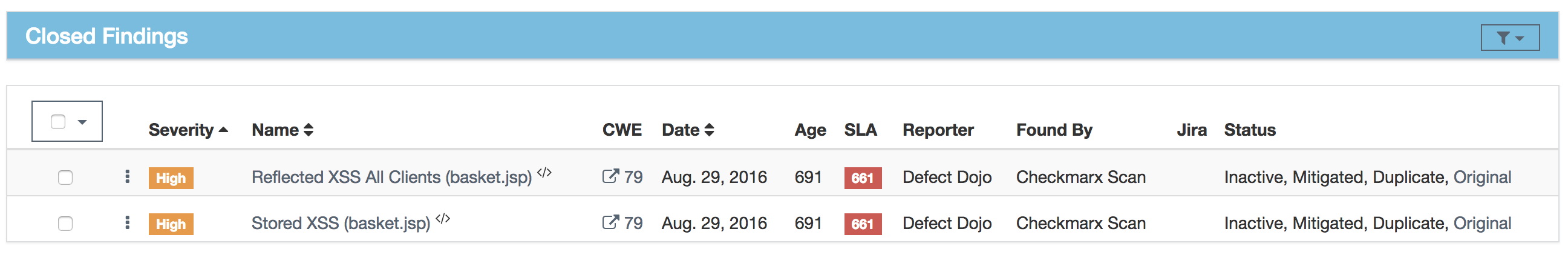

Findings

A finding represents a flaw discovered while testing. It can be categorized with severities of Critical, High, Medium, Low, and Informational (Info).

Each Finding gets a unique ID and a Status.

Findings are the defects or interesting things that you want to keep track of when testing a Product during a Test/Engagement. Here, you can lay out the details of what went wrong, where you found it, what the impact is, and your proposed steps for mitigation.

Authorized users can force the Status, Severity and Risk Level of a finding. Each such operation is tracked in a special log that authorized users can view.

You can filter by: ID, Application, Severity, Finding Name, Date range, SLA, Auditor (Reporter, Found By), Status, Risk Level and Number of Vulnerabilities.

You can also reference CWEs or add links to your own references (external documentation links included).
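To illustrate how a few of these filters combine, the sketch below applies a minimum severity, a status and a date range to a list of findings and sorts the result most severe first. The `Finding` class and `filter_findings` are hypothetical stand-ins, not Team Reviewer's data model; only the severity scale (Critical to Info) comes from this document.

```python
from dataclasses import dataclass
from datetime import date

# Severity scale from this document, most to least severe.
SEVERITY_ORDER = ["Critical", "High", "Medium", "Low", "Info"]


@dataclass
class Finding:  # hypothetical, simplified model
    id: int
    application: str
    title: str
    severity: str
    status: str
    found_on: date


def filter_findings(findings, min_severity="Low", status=None,
                    start=None, end=None):
    """Keep findings at or above min_severity, optionally by status and date range."""
    max_rank = SEVERITY_ORDER.index(min_severity)
    result = []
    for f in findings:
        if SEVERITY_ORDER.index(f.severity) > max_rank:
            continue
        if status is not None and f.status != status:
            continue
        if start is not None and f.found_on < start:
            continue
        if end is not None and f.found_on > end:
            continue
        result.append(f)
    # Most severe first, then oldest first.
    return sorted(result, key=lambda f: (SEVERITY_ORDER.index(f.severity), f.found_on))


findings = [
    Finding(1, "shop", "SQL Injection", "Critical", "Active", date(2022, 3, 1)),
    Finding(2, "shop", "Missing HSTS", "Low", "Active", date(2022, 3, 2)),
    Finding(3, "shop", "Reflected XSS", "High", "Mitigated", date(2022, 3, 3)),
]
print([f.id for f in filter_findings(findings, min_severity="High")])
# → [1, 3]
```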

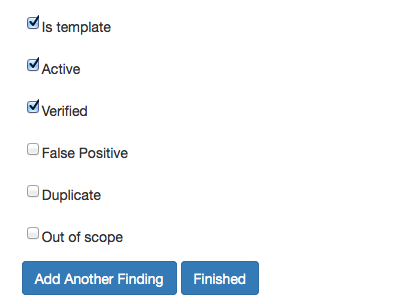

Templating findings allows you to create a version of a finding descriptor that you can then re-use over and over again, on any Engagement.

False Positive and Duplicates

Templates can be used across all Engagements. Define what kind of Finding this is. Is it a false positive? A duplicate? If you want to save this finding as a template, check the “Is template” box.

Findings cannot always be remediated or addressed for various reasons.

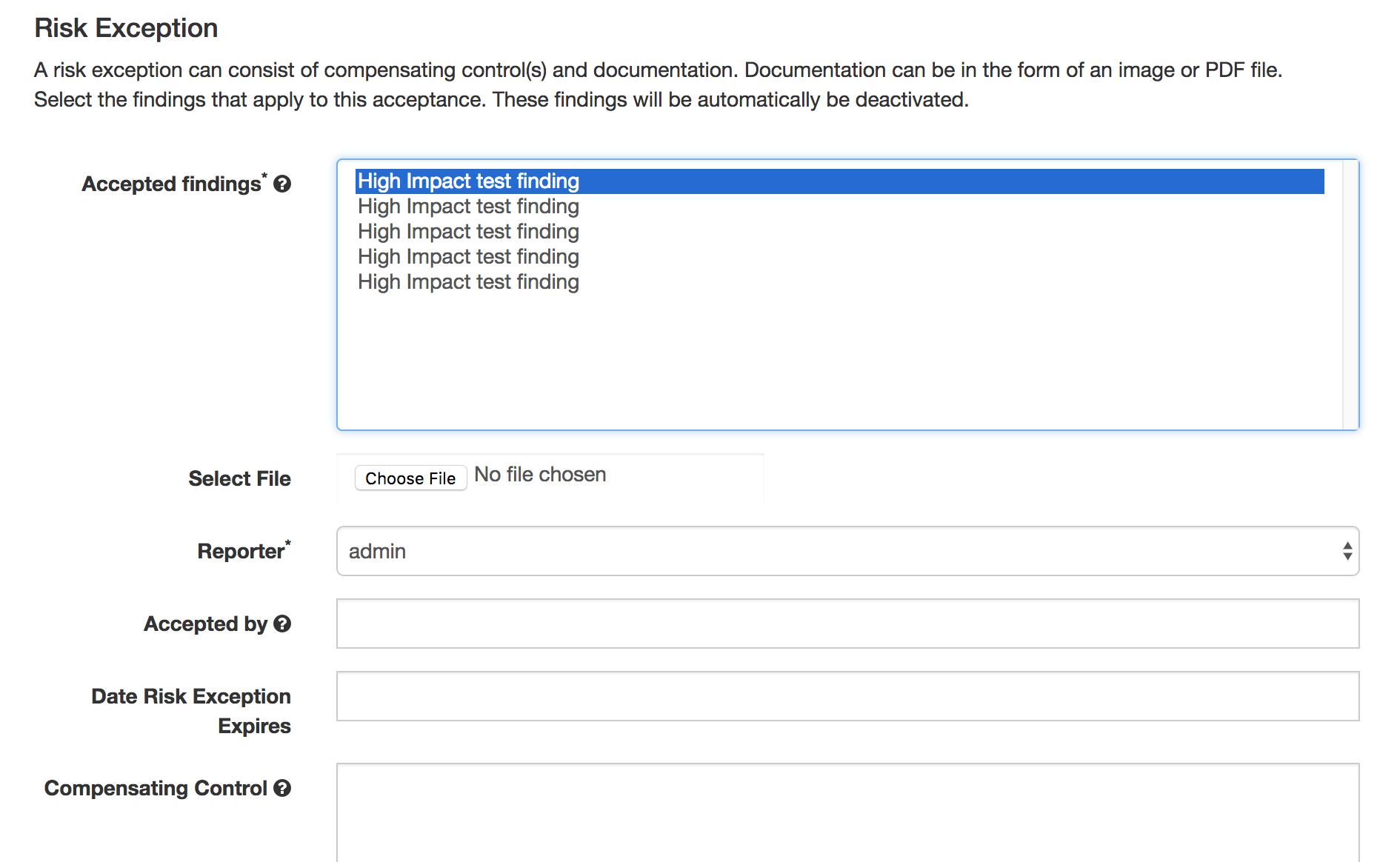

A Finding's status can be changed to Accepted from the Engagement view.

Fill in the details that support the risk acceptance.

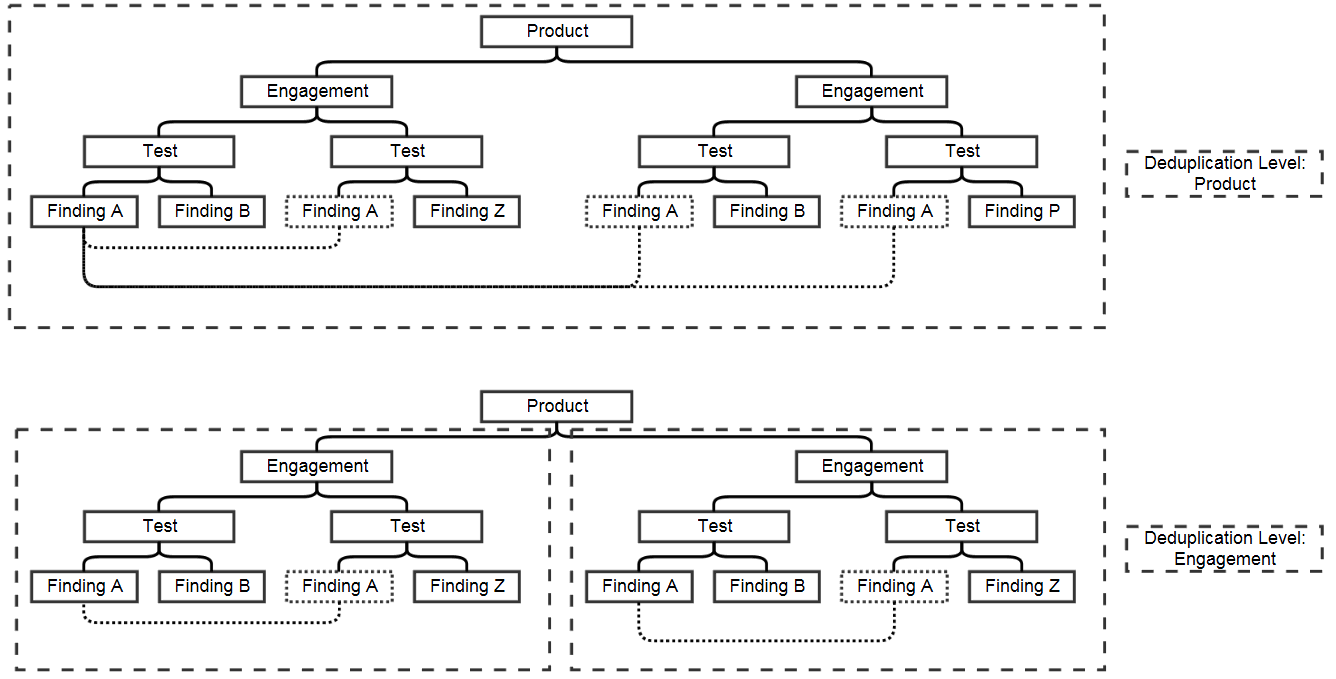

De-duplication is a feature that, when enabled, compares findings to automatically identify duplicates.

To enable de-duplication go to System Settings and check Deduplicate findings.

Team Reviewer deduplicates findings by comparing endpoints, CWE fields and titles. If two findings share a URL and have the same CWE or title, Team Reviewer marks the more recent finding as a duplicate. When de-duplication is enabled, a list of deduplicated findings is added to the engagement view.
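The matching rule can be sketched in a few lines of Python. This is an illustration, not Team Reviewer's implementation: the `Finding` class is hypothetical, and the sketch assumes, as in upstream DefectDojo, that the earliest finding is kept as the original and later matches are flagged as its duplicates.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Finding:  # hypothetical, simplified model
    id: int
    url: str
    cwe: int
    title: str
    created: datetime
    duplicate_of: Optional[int] = None


def is_match(a, b):
    """Same URL, and the same CWE or the same title."""
    return a.url == b.url and (a.cwe == b.cwe or a.title == b.title)


def deduplicate(findings):
    """Mark each finding that matches an earlier original as its duplicate."""
    originals = []
    for f in sorted(findings, key=lambda f: f.created):
        match = next((o for o in originals if is_match(o, f)), None)
        if match is None:
            originals.append(f)
        else:
            f.duplicate_of = match.id
    return findings


fs = [
    Finding(1, "https://app/login", 89, "SQL Injection", datetime(2022, 1, 10)),
    Finding(2, "https://app/login", 89, "SQLi in login form", datetime(2022, 2, 1)),
    Finding(3, "https://app/search", 79, "Reflected XSS", datetime(2022, 2, 5)),
]
deduplicate(fs)
print([(f.id, f.duplicate_of) for f in fs])
# → [(1, None), (2, 1), (3, None)]
```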

The following image illustrates deduplication at the engagement level and at the product level:

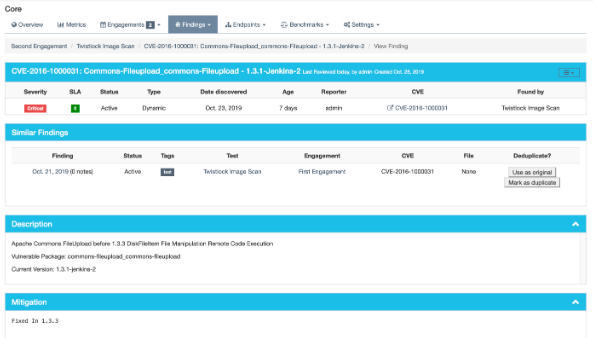

While viewing a finding, similar findings within the same product are listed along with buttons to mark one finding as a duplicate of the other.

Clicking the “Use as original” button on a similar finding will mark that finding as the original while marking the viewed finding as a duplicate.

Clicking the “Mark as duplicate” button on a similar finding will mark that finding as a duplicate of the viewed finding.

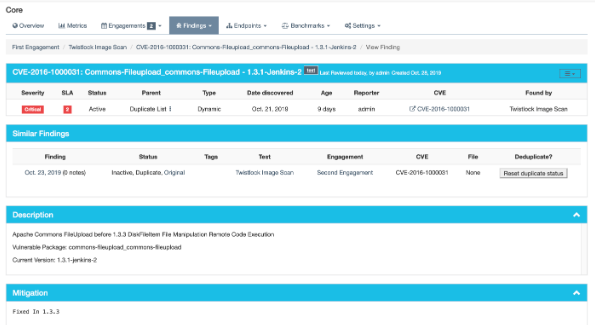

If a similar finding is already marked as a duplicate, then a “Reset duplicate status” button is shown instead which will remove the duplicate status on that finding along with marking it active again.

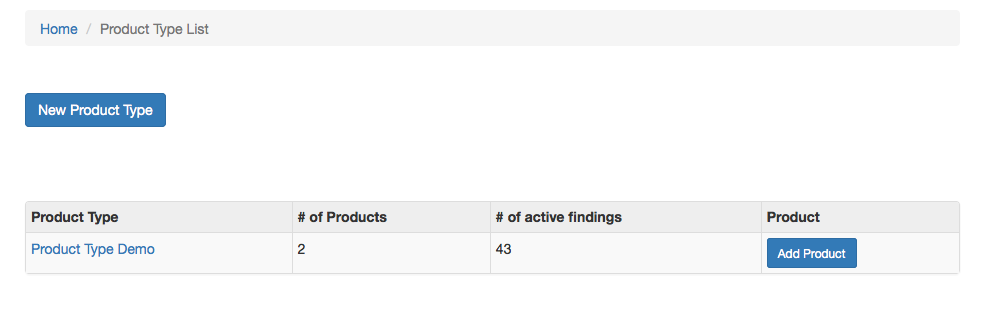

Product Types

Product Types represent the top-level model. They can be business unit divisions, different offices or locations, development teams, or any other logical way of distinguishing “types” of products.

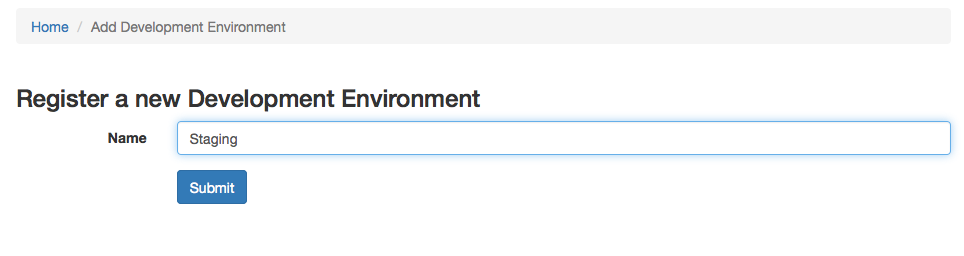

Environments

These describe the environment that was tested in a particular Test.

Examples

Production

Staging

Stable

Development

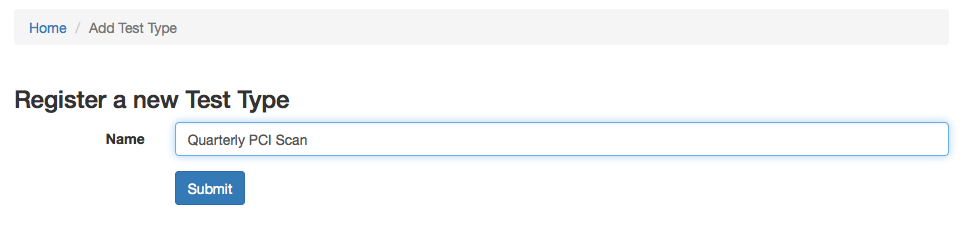

Test Types

These can be any sort of distinguishing characteristic about the type of testing that was done in an Engagement.

Examples

Functional

Security

Nessus Scan

API test

Static Analysis

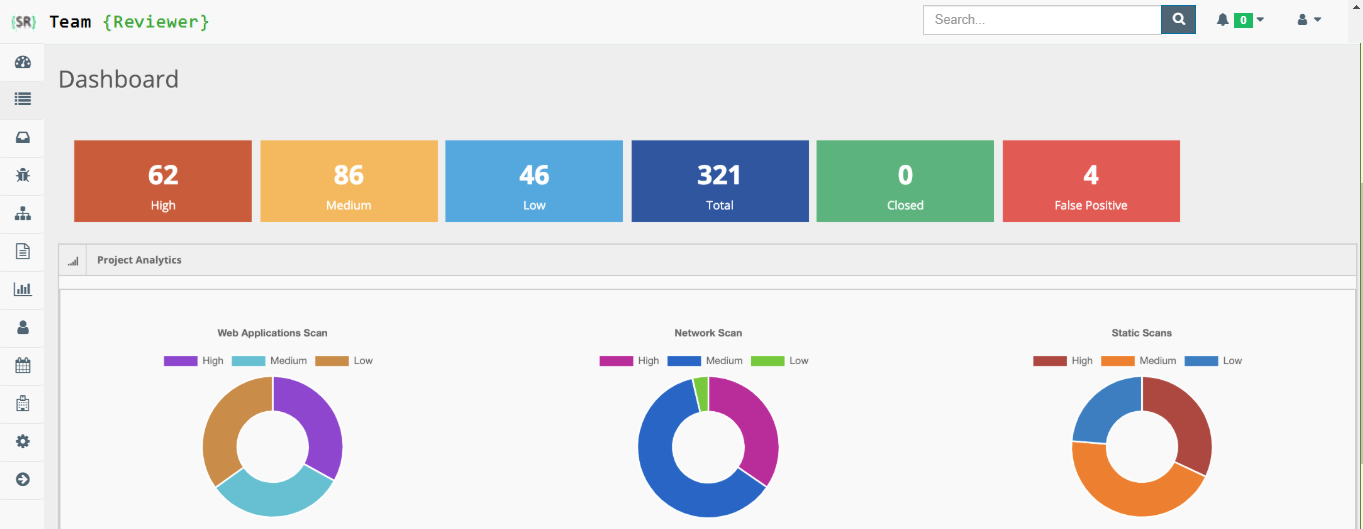

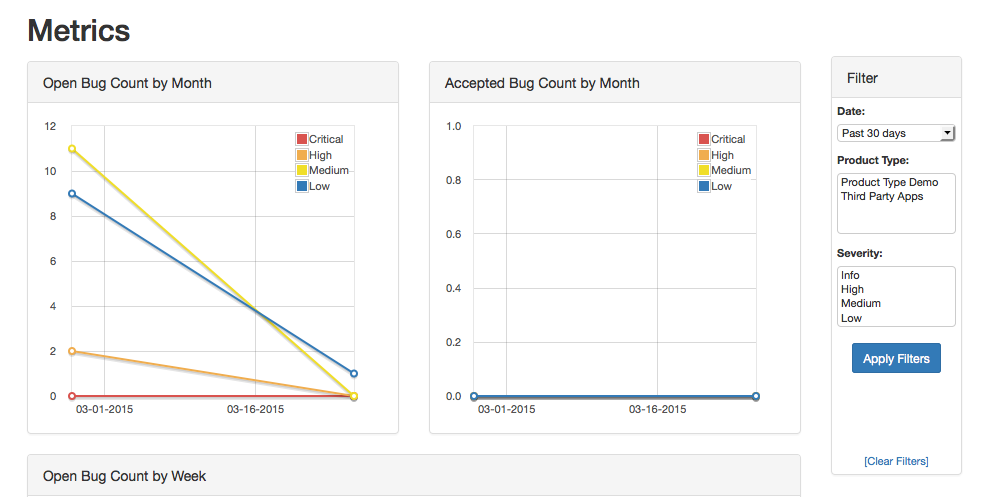

Metrics

Tracking metrics for your Products can help you identify Products that may need additional help, or highlight a particularly effective member of your team.

You can also see the Dashboard view, a page that scrolls automatically, showing off the results of your testing.

This can be useful if you want to display your team’s work in public without showing specific details.

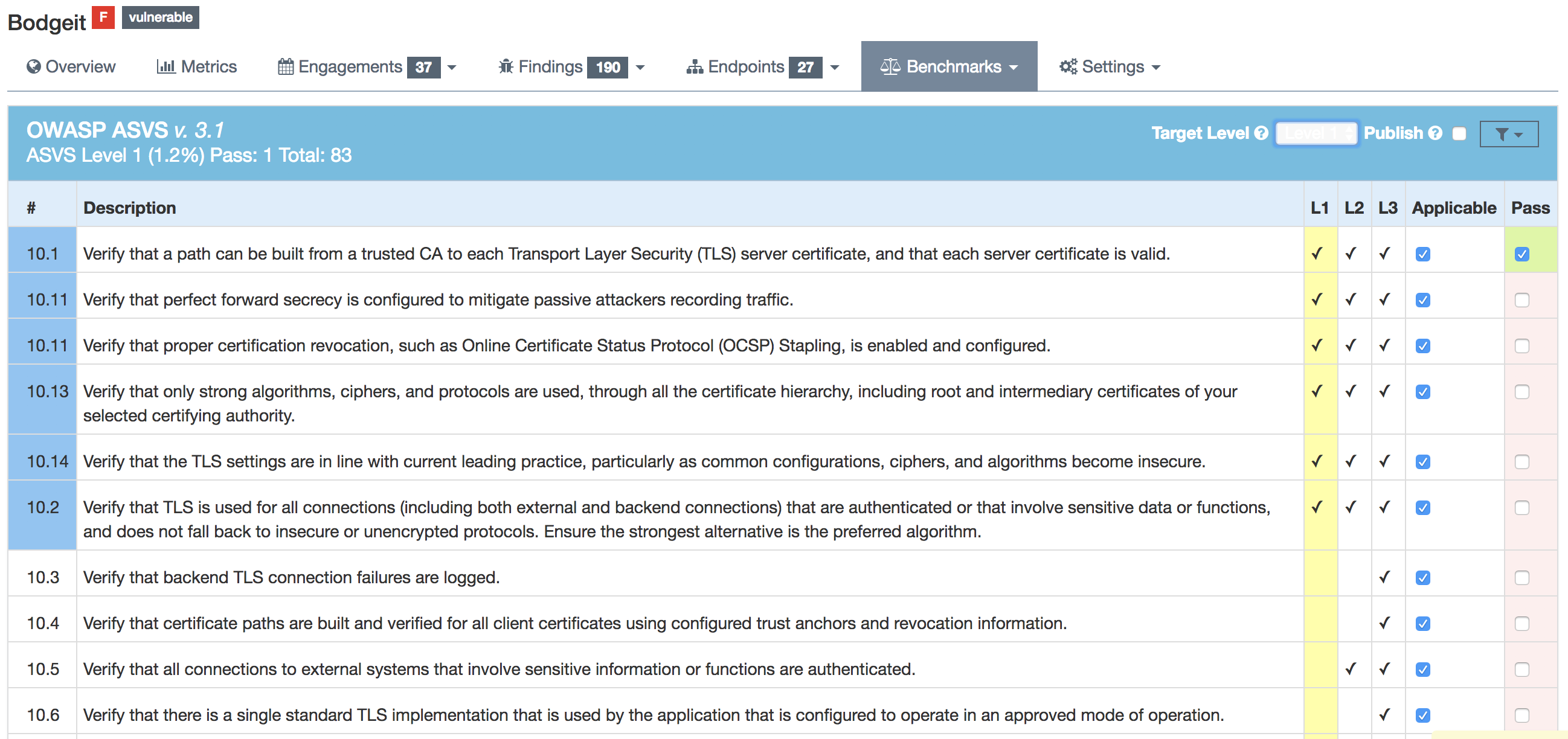

Benchmarks

Team Reviewer uses the OWASP ASVS benchmarks to verify that a product meets your application's technical security controls.

Benchmarks can be defined according to the organization's secure development policy, and multiple benchmarks can be applied to a product.

Benchmarks are available from the Product view.

In the Benchmarks view for each product, the default level is ASVS Level 1, but it can be changed to the desired ASVS level (Level 1, Level 2 or Level 3).

The ASVS score is also displayed on the product page and included in reports.

On the left-hand side, the ASVS score is displayed together with the desired score, the percentage of benchmarks passed to achieve the score and the total number of enabled benchmarks for that ASVS level.
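A minimal sketch of the percentage described above, assuming the score is simply the share of enabled benchmarks that pass (the exact formula Team Reviewer uses is not stated here). The input dictionary and `asvs_score` are hypothetical; the requirement IDs only illustrate the ASVS `Vx.y.z` style.

```python
# Hypothetical benchmark results: ASVS requirement ID -> (enabled, passed).
def asvs_score(results):
    """Return (passed, enabled, percent) computed over enabled benchmarks only."""
    enabled = [passed for is_enabled, passed in results.values() if is_enabled]
    passed = sum(1 for p in enabled if p)
    percent = round(100 * passed / len(enabled), 1) if enabled else 0.0
    return passed, len(enabled), percent


level1 = {
    "V2.1.1": (True, True),
    "V2.1.2": (True, False),
    "V3.4.1": (True, True),
    "V5.3.4": (False, False),  # disabled by policy, so not counted
}
print(asvs_score(level1))
# → (2, 3, 66.7)
```

Excluding disabled benchmarks from the denominator keeps an organization's policy choices from lowering the score.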

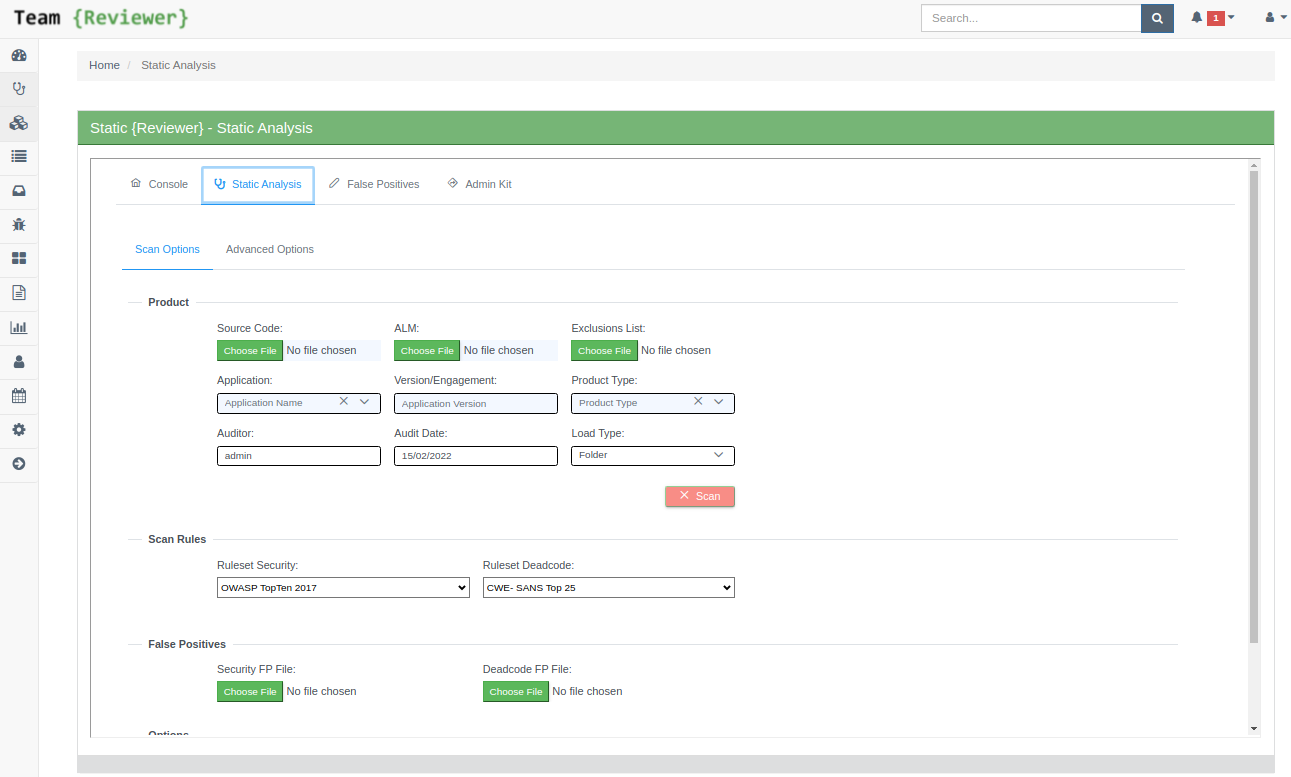

Static Server Plugin

Static Server Plugin for Team Reviewer (to be purchased separately) is able to run Static Analyses over a Source Code Folder, directly from Team Reviewer.

You can do:

Static Analyses

Mark False Positives

Enable/Disable and change Severity of existing Vulnerability Detection Rules

Add Custom Rules

Declare Recurring False Positives by Evidence

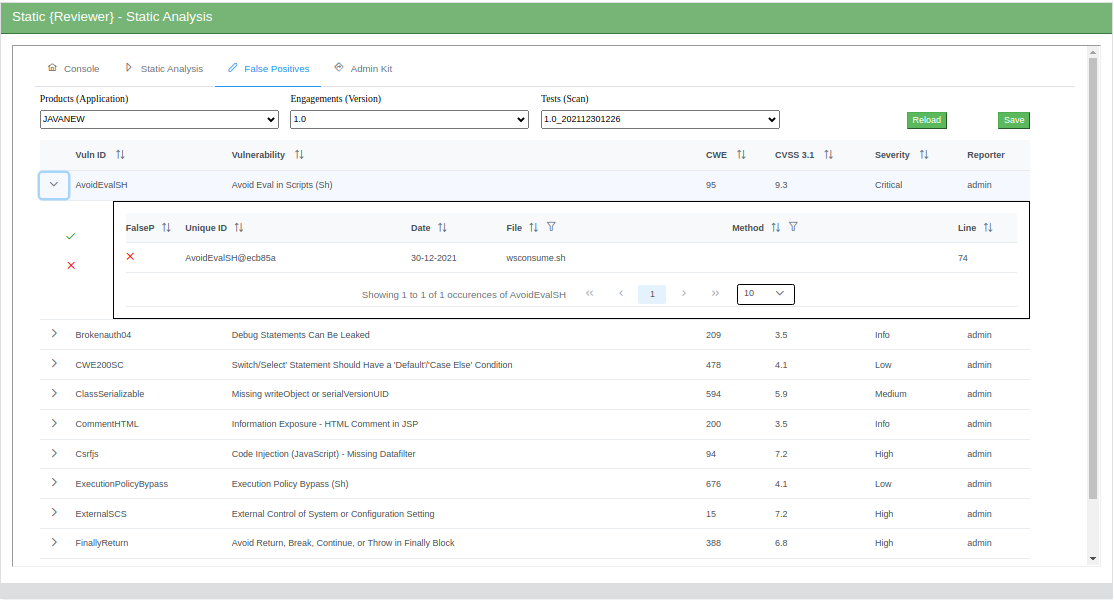

You start Source Code Inspections by clicking Static Analysis in the main Dashboard.

The Static Analysis features are the same as those of Static Reviewer Desktop, but centralized and accessible from any browser.

You can mark False Positives in bulk using our smart interface:

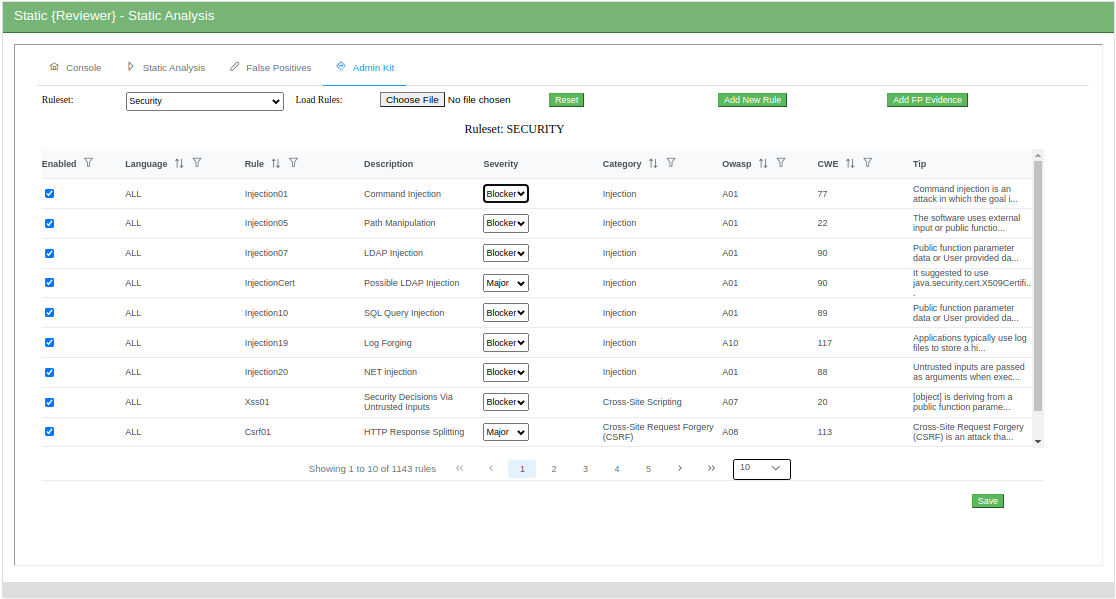

You can Enable/Disable and change Severity of existing Vulnerability Detection Rules (authorized users only):

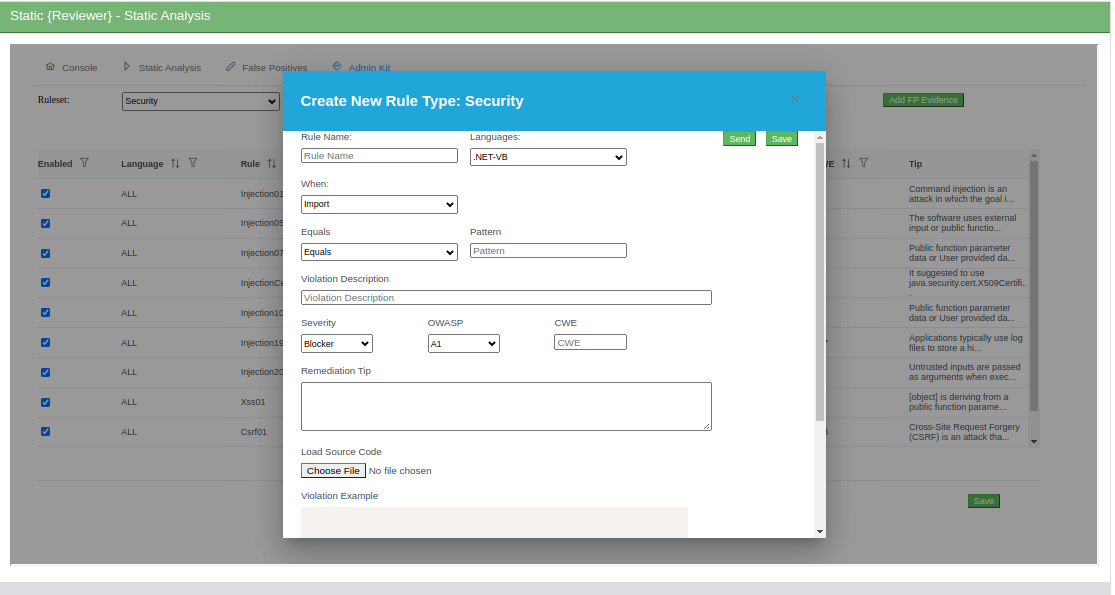

You can create your Custom Rules (authorized users only):

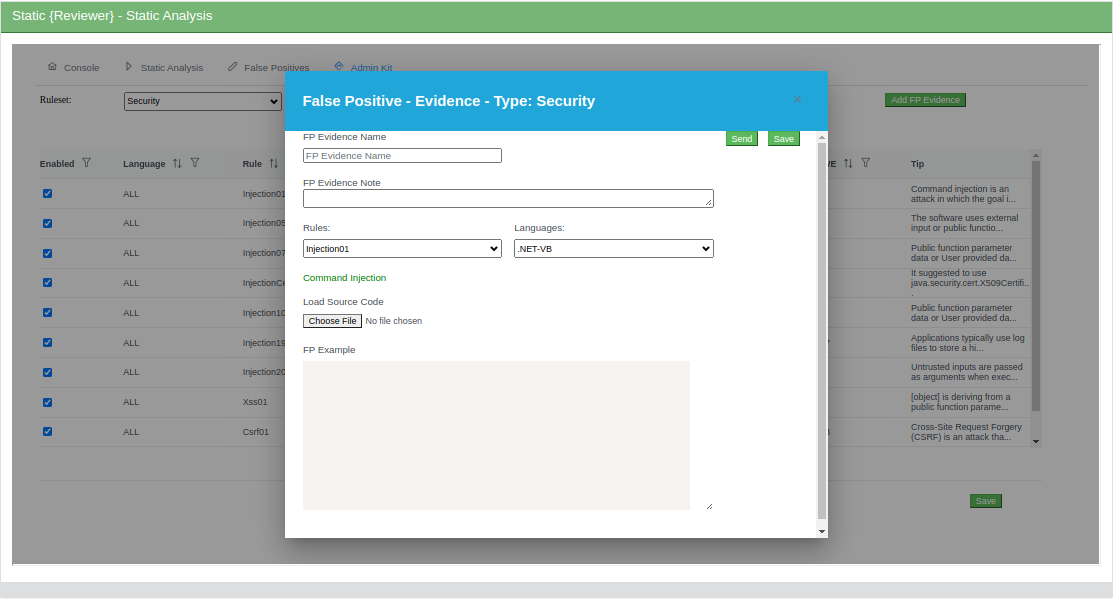

You can declare Recurring False Positives by Evidence (authorized users only):

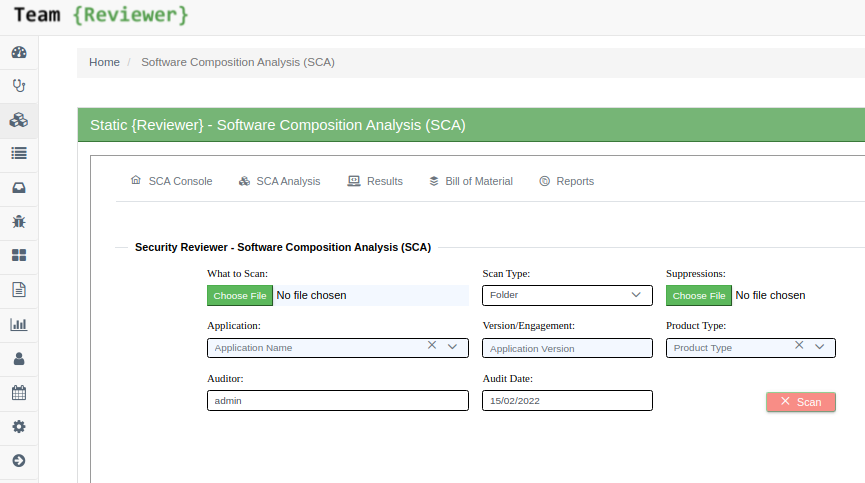

SCA Server Plugin

SCA Server Plugin for Team Reviewer (to be purchased separately) is able to run Software Composition Analyses, directly from Team Reviewer.

You can do:

Software Composition Analysis of a folder containing third-party libraries

Software Composition Analysis of a Container

Software Composition Analysis of a Git Repository

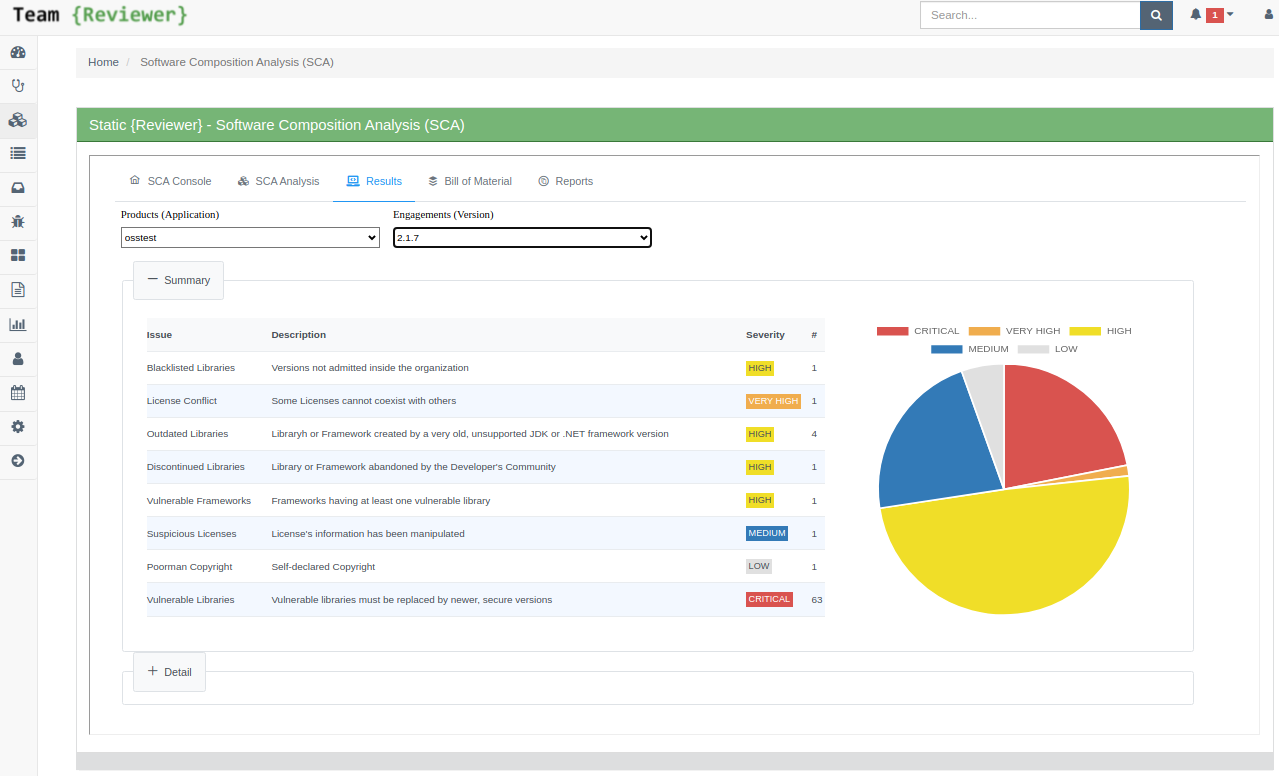

A Software Composition Analysis will discover:

Blacklisted Libraries: Versions not allowed within the organization

License Conflict: Licenses that cannot coexist with others

Outdated Libraries: Libraries or Frameworks created by a very old, unsupported JDK or .NET Framework version

Discontinued Libraries: Libraries or Frameworks abandoned by their developer community

Vulnerable Frameworks: Frameworks having at least one vulnerable library

Suspicious Licenses: License information has been manipulated

Poor-man Copyright: Self-declared Copyright

Vulnerable Libraries: Vulnerable libraries that must be replaced by newer, secure versions
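Two of these categories can be illustrated with a simple policy check. The sketch below flags libraries whose exact version is blacklisted and libraries older than a known fixed-in version. The library names, versions and both policy tables are hypothetical; a real SCA Reviewer run relies on its own vulnerability and license databases.

```python
# Hypothetical policy data for illustration only.
BLACKLIST = {("acme-logging", "2.14.1")}
FIXED_IN = {"acme-parser": "1.5.2"}  # first version without the known flaw


def parse_version(v):
    # Simplified: handles purely numeric dotted versions such as "1.10.0".
    return tuple(int(part) for part in v.split("."))


def classify(libraries):
    """Map library name -> category for (name, version) pairs that need action."""
    findings = {}
    for name, version in libraries:
        if (name, version) in BLACKLIST:
            findings[name] = "Blacklisted Library"
        elif name in FIXED_IN and parse_version(version) < parse_version(FIXED_IN[name]):
            findings[name] = "Vulnerable Library"
    return findings


deps = [("acme-logging", "2.14.1"), ("acme-parser", "1.4.0"), ("acme-http", "3.0.0")]
print(classify(deps))
# → {'acme-logging': 'Blacklisted Library', 'acme-parser': 'Vulnerable Library'}
```

Comparing versions as integer tuples, rather than as strings, keeps "1.10.0" correctly newer than "1.5.2".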

You start a Software Composition Analysis clicking SCA Analysis in the main Dashboard:

The Software Composition Analysis features are the same of SCA Desktop, but centralized and accessible by any browser:

Once the SCA analysis is terminated you can go to Results page:

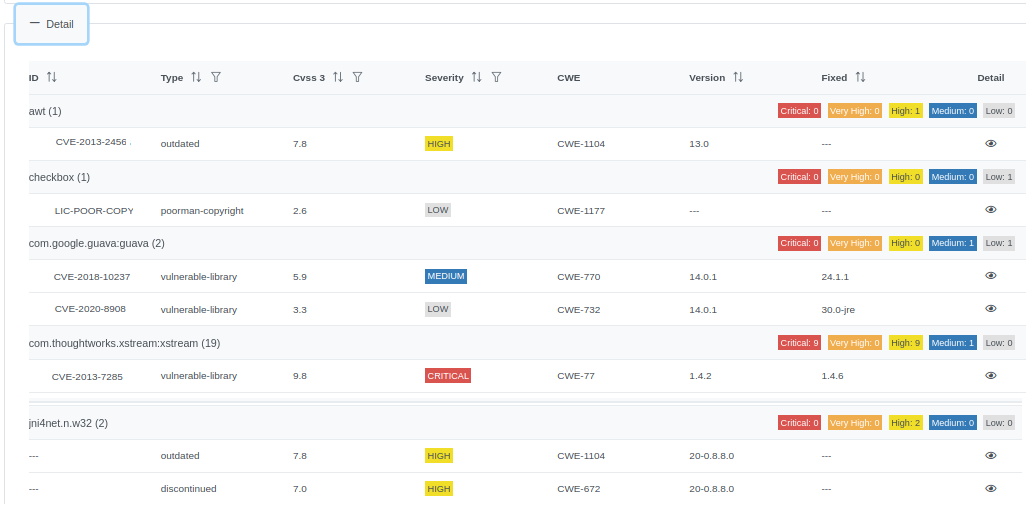

You can drill-down the results:

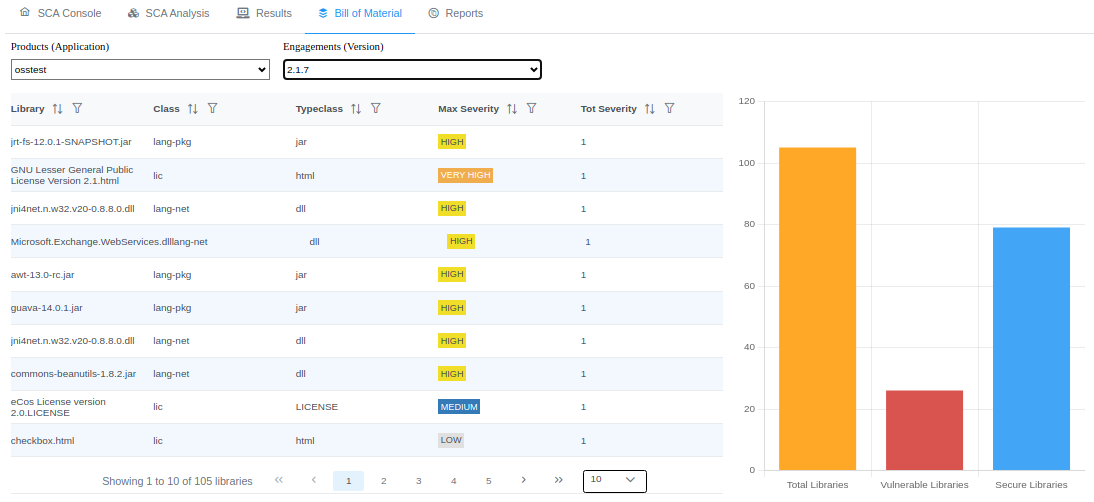

You can view the Software Bill of Materials (SBOM):

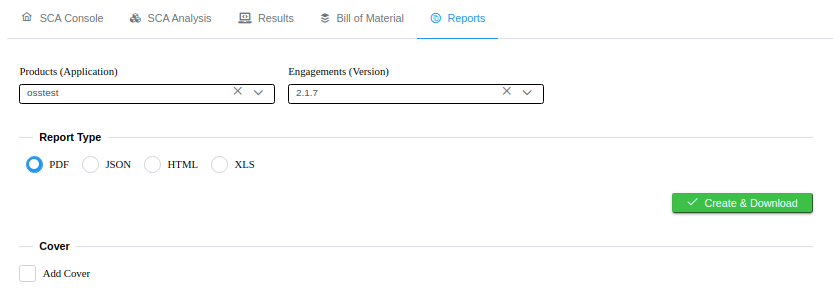

And you can download reports in PDF, Word, Excel and HTML formats:

Additionally, you can add a custom Cover Letter with your logo, your ISO 9001 responsibility chain, the confidentiality level and your disclaimer.

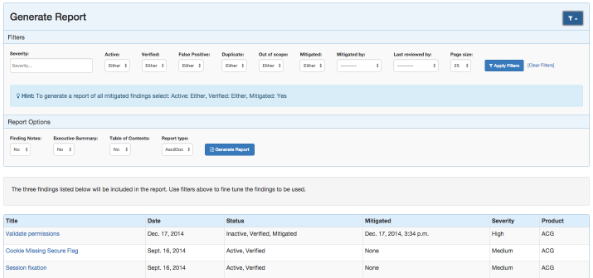

Reports

Team Reviewer stores reports generated with:

Static Reviewer Desktop

Static Reviewer CI/CD plugins for Jenkins and GitLab

SCA Reviewer Desktop

SCA Reviewer CI/CD plugins for Jenkins and GitLab

Dynamic Reviewer

Mobile Reviewer

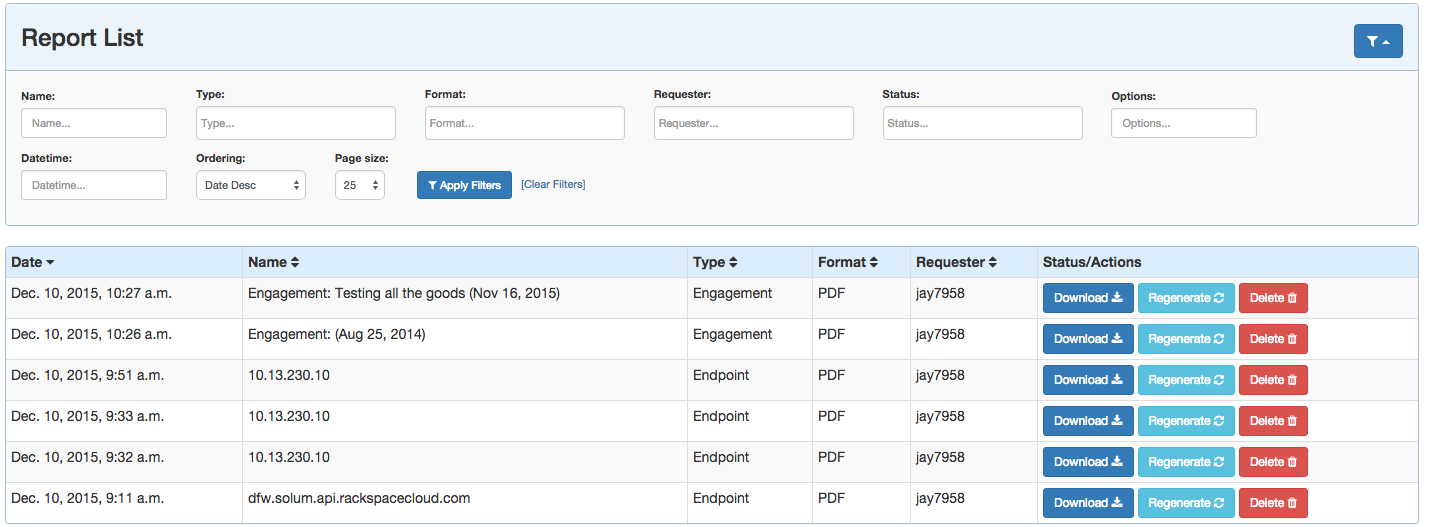

Further, you can create your own custom reports by using Team Reviewer Report Generator.

Team Reviewer custom reports can be generated in Word, Excel, XML, HTML, and AsciiDoc. If you need different formats, open the Word reports and choose Save As…

Reports can be generated for:

Groups of Products

Individual Products

Endpoints

Product Types

Custom Reports

Filtering is available on all Report Generation views to aid in focusing the report for the appropriate need.

Custom reports allow you to select specific components to be added to the report. These include:

Cover Page

Table of Contents

WYSIWYG Content

Findings List

Endpoint List

Page Breaks

The custom report workflow takes advantage of the same asynchronous report generation process used for the other reports.

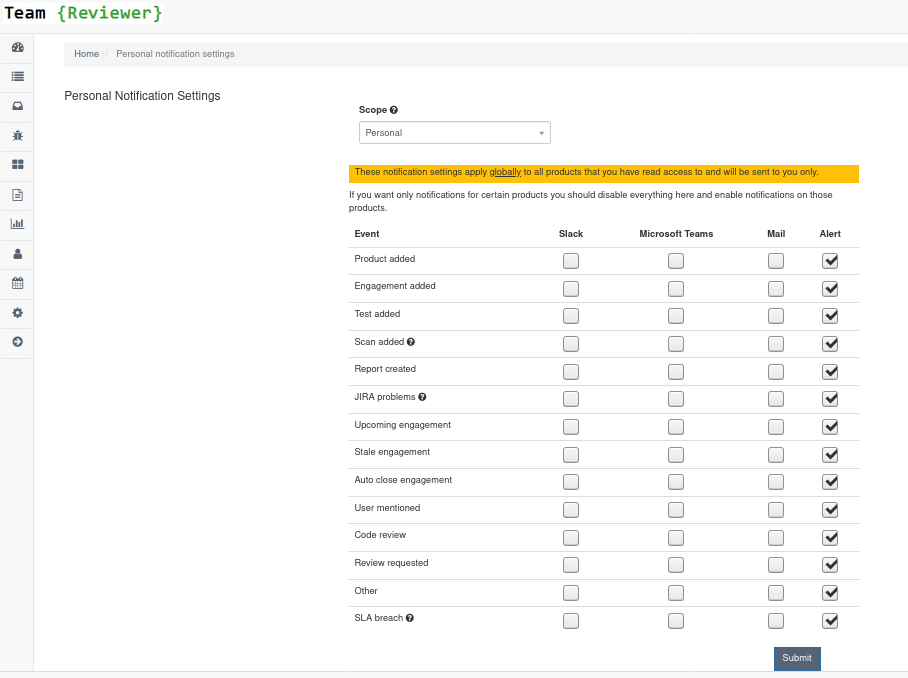

Notifications

Team Reviewer can inform you of different events in a variety of ways. You can be notified about things like an upcoming engagement, when someone mentions you in a comment, a scheduled report has finished generating, and more.

The following notification methods currently exist:

Email

Slack

HipChat

WebHook

Alerts within Team Reviewer

You can set these notifications on a system scope (if you have administrator rights) or on a personal scope. For instance, an administrator might want notifications of all upcoming engagements sent to a certain Slack channel, whereas an individual user wants email notifications to be sent to the user’s specified email address when a report has finished generating.

In order to identify and notify you about things like upcoming engagements, Team Reviewer runs scheduled tasks for this purpose.

Attached Documents

Products, Engagements and Tests allow you to attach one or more documents, such as requirements documents, project documents, evidence, certifications, risk acceptances and any related documents you need.

Supported file formats are PDF, Word, Excel and images.

Security Reviewer’s Security, Deadcode-Best Practices, Resilience and SQALE reports are uploaded as Engagement’s Attached Documents to Team Reviewer using REST APIs.

Results Correlation

Team Reviewer can import and correlate results from the following tools:

Static Reviewer, Security Reviewer Software Composition Analysis (SCA), Security Reviewer Software Resilience Analysis (SRA), Mobile Reviewer and Dynamic Reviewer XML or CSV

HCL AppScan Source ed. and Standard ed. detailed XML Report

Micro Focus Fortify SCA and WebInspect FPR

CA Veracode Detailed XML Report

Checkmarx Detailed XML Report

Rapid7 AppSpider Vulnerabilities Summary XML Report and Nexpose XML 2.0

Acunetix

Anchore

AQUA

Arachni Scanner JSON Report

AWS Prowler and Scout2

Bandit

Synopsys BlackDuck

Brakeman

BugCrowd

Contrast

ESLint

GitLab SAST

GitLeaks

GoAST

Gosec

Hadolint

HuskyCI

ImmuniWeb

JFrog XRay

Kiuwan

Burp Suite XML

Nessus (CSV, XML)

NetSparker

Nexpose

npm audit

OpenSCAP

OpenVAS

PHP Symfony Security Check

Nmap (XML), SQLMap, NoSQLMap (text output)

OWASP ZAP XML and Dependency Check XML

Retire.js JavaScript Scan JSON

Node Security Platform JSON

Qualys XML

SonarQube

Sonatype Nexus

SourceClear

SSLScan

SSLyze

Snyk JSON

Trivy

Trustwave

PyJFuzz

WhiteSource

WPScan

Generic Findings in CSV format
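For tools not listed above, results can be converted to the generic CSV format. The sketch below writes findings with Python's `csv` module. The column names are an assumption taken from the upstream DefectDojo generic findings parser, and the date format shown is also an assumption; check both against the header your Team Reviewer version expects.

```python
import csv
import io

# Assumed column set (upstream DefectDojo generic CSV); verify on your installation.
COLUMNS = ["Date", "Title", "CweId", "Url", "Severity", "Description",
           "Mitigation", "Impact", "References", "Active", "Verified"]


def findings_to_csv(rows):
    """Serialize finding dicts to CSV; missing keys become empty cells."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=COLUMNS)
    writer.writeheader()
    for row in rows:
        writer.writerow(row)
    return buf.getvalue()


csv_text = findings_to_csv([{
    "Date": "2022-03-01",  # date format is an assumption
    "Title": "Hard-coded password",
    "CweId": 798,
    "Url": "",
    "Severity": "High",
    "Description": "Password literal in config.py",
    "Active": "True",
    "Verified": "False",
}])
print(csv_text.splitlines()[0])
# → Date,Title,CweId,Url,Severity,Description,Mitigation,Impact,References,Active,Verified
```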

Team Reviewer can export correlated results to the following tools:

SonarQube

Micro Focus Fortify SSC

Kenna Security

ThreadFix

ServiceNow

See our EcoSystem.

Team Reviewer can access Firmware Reviewer using Single Sign-On.

Authentication via LDAP/AD

LDAP (Lightweight Directory Access Protocol) is an Internet protocol that web applications can use to look up information about users and groups stored on an LDAP server. You can connect Team Reviewer to an LDAP directory for authentication and for user and group management. Connecting to an LDAP directory server is useful if user groups are stored in a corporate directory. Synchronization with LDAP allows the automatic creation, update and deletion of users and groups in Team Reviewer according to any changes made in the LDAP directory.
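The create/update/delete synchronization described above boils down to comparing a directory snapshot with the local accounts. The sketch below shows only that reconciliation step; it does not talk to an LDAP server, and both input dictionaries (username mapped to attributes) are hypothetical. Groups can be reconciled the same way.

```python
def plan_ldap_sync(ldap_users, local_users):
    """Compare directory entries with local accounts (both: username -> attributes)."""
    to_create = sorted(set(ldap_users) - set(local_users))
    to_delete = sorted(set(local_users) - set(ldap_users))
    to_update = sorted(
        name for name in set(ldap_users) & set(local_users)
        if ldap_users[name] != local_users[name]
    )
    return {"create": to_create, "update": to_update, "delete": to_delete}


ldap = {
    "alice": {"mail": "alice@acme.test", "groups": ("dev",)},
    "bob": {"mail": "bob@acme.test", "groups": ("sec",)},
}
local = {
    "bob": {"mail": "bob@old.acme.test", "groups": ("sec",)},
    "carol": {"mail": "carol@acme.test", "groups": ("dev",)},
}
print(plan_ldap_sync(ldap, local))
# → {'create': ['alice'], 'update': ['bob'], 'delete': ['carol']}
```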

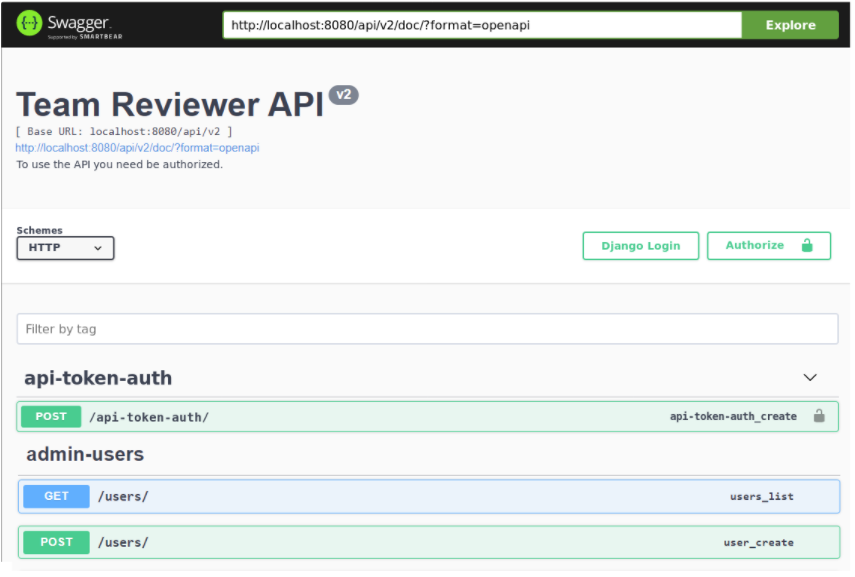

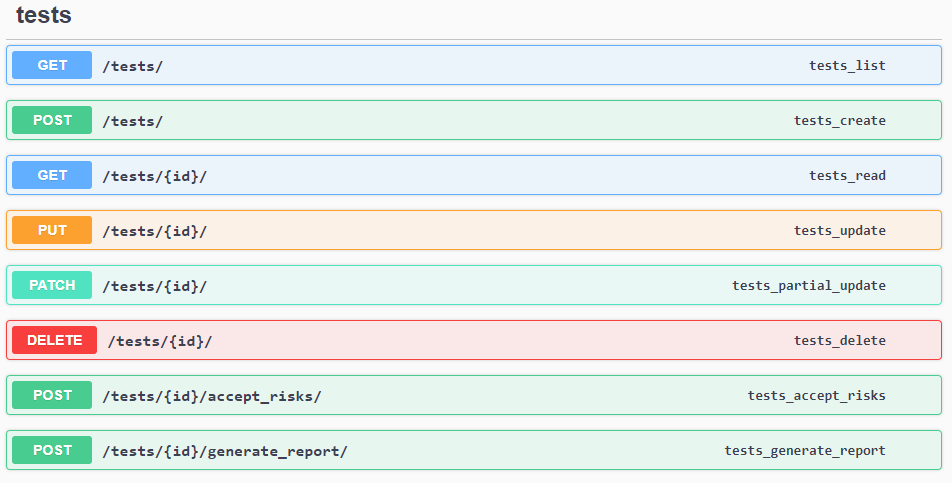

REST API

Team Reviewer is built using a thin-server architecture and an API-first design. APIs are at the heart of the platform. Every API is fully documented via Swagger 2.0.

The Swagger UI console can be used to visualize and explore the wide range of possibilities.

Prior to using the REST APIs, an API Key must be generated. By default, creating a Group (Team) will also create a corresponding API key. A Group (Team) may have multiple keys.

Team Reviewer's API is built with Django REST Framework. The documentation of each endpoint is available within each Team Reviewer installation at /api/v2/doc/ and can be accessed by choosing the API v2 Docs link on the user drop-down menu in the header.

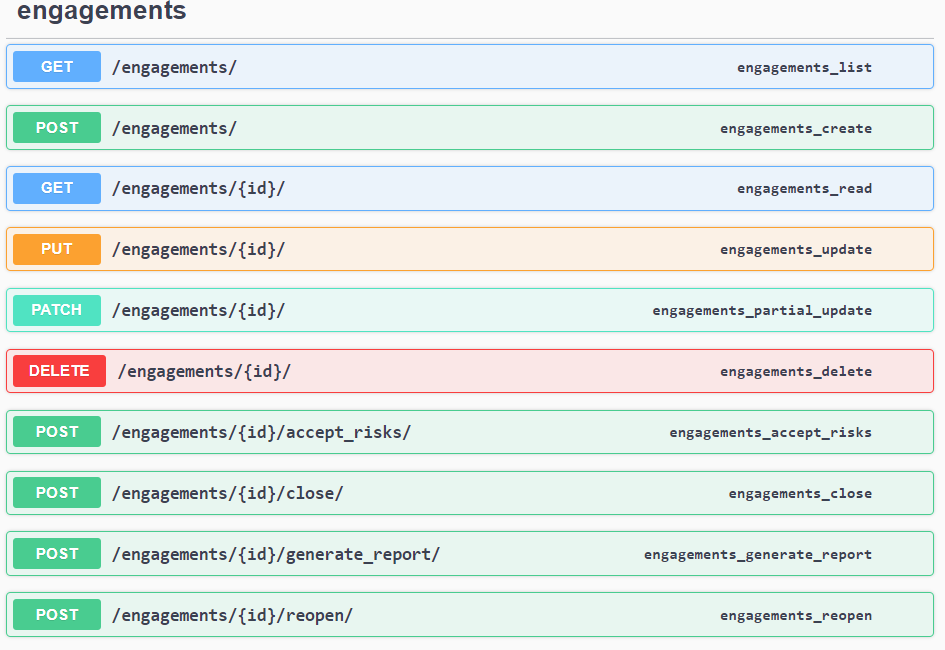

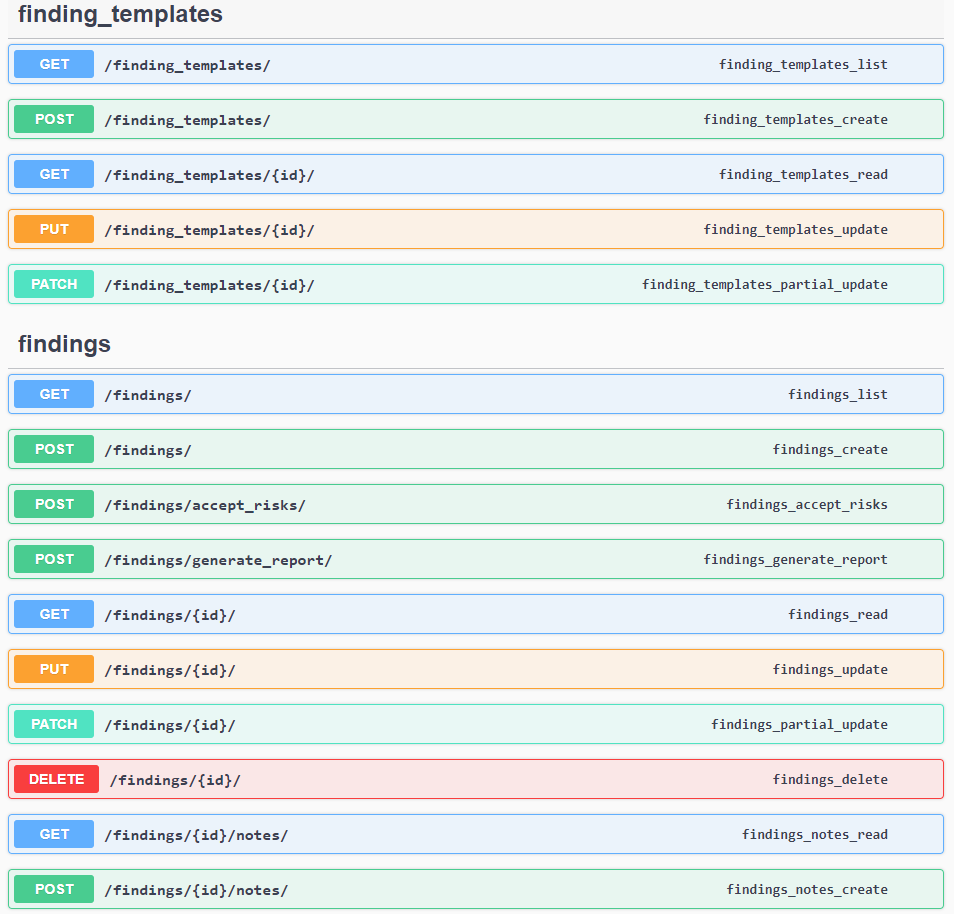

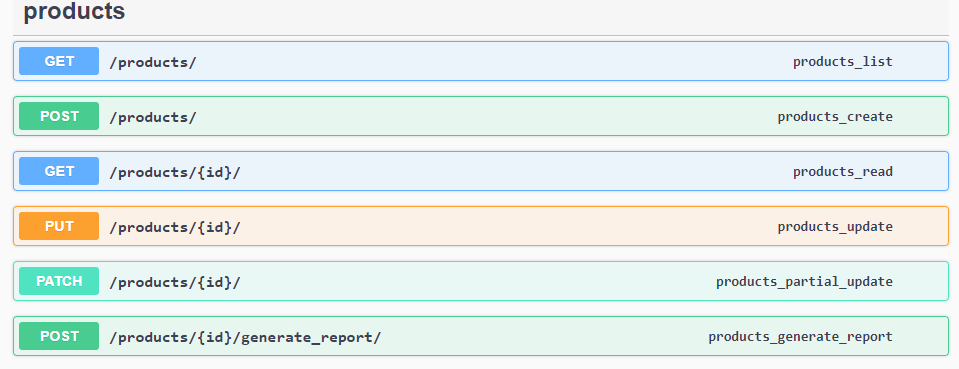

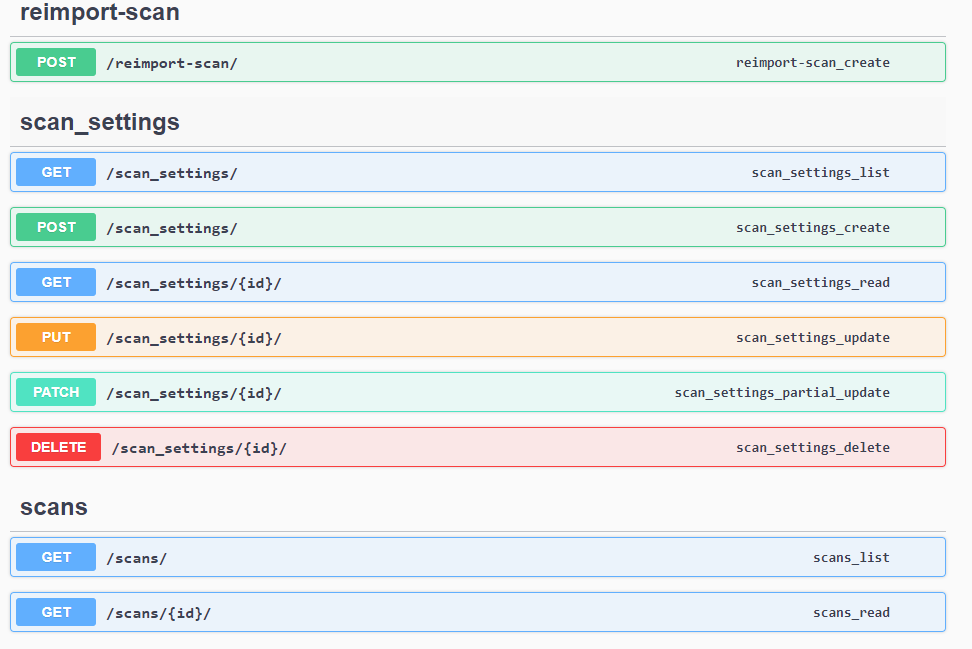

The main Swagger sections provide APIs for the following areas:

api-token-auth

The API uses header authentication with an API key. To interact with the documentation, a valid Authorization header value is needed. Authorized users can also create a new API authorization token.
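A minimal sketch of header authentication, assuming the upstream DefectDojo conventions: `POST /api/v2/api-token-auth/` exchanges a username and password for `{"token": "..."}`, and later calls send `Authorization: Token <key>`. The host is hypothetical, and the network calls are left commented out so the sketch runs offline.

```python
import json
import urllib.request

BASE_URL = "https://teamreviewer.example.com"  # hypothetical host


def token_request(username, password):
    """Request that exchanges credentials for an API token (not sent here)."""
    return urllib.request.Request(
        f"{BASE_URL}/api/v2/api-token-auth/",
        data=json.dumps({"username": username, "password": password}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def authorized_get(path, token):
    """GET request carrying the API key in the Authorization header."""
    return urllib.request.Request(
        f"{BASE_URL}{path}",
        headers={"Authorization": f"Token {token}", "Accept": "application/json"},
    )


# Against a live server (assumed response shapes):
# with urllib.request.urlopen(token_request("alice", "s3cret")) as resp:
#     token = json.load(resp)["token"]
# with urllib.request.urlopen(authorized_get("/api/v2/findings/", token)) as resp:
#     print(json.load(resp)["count"])
```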

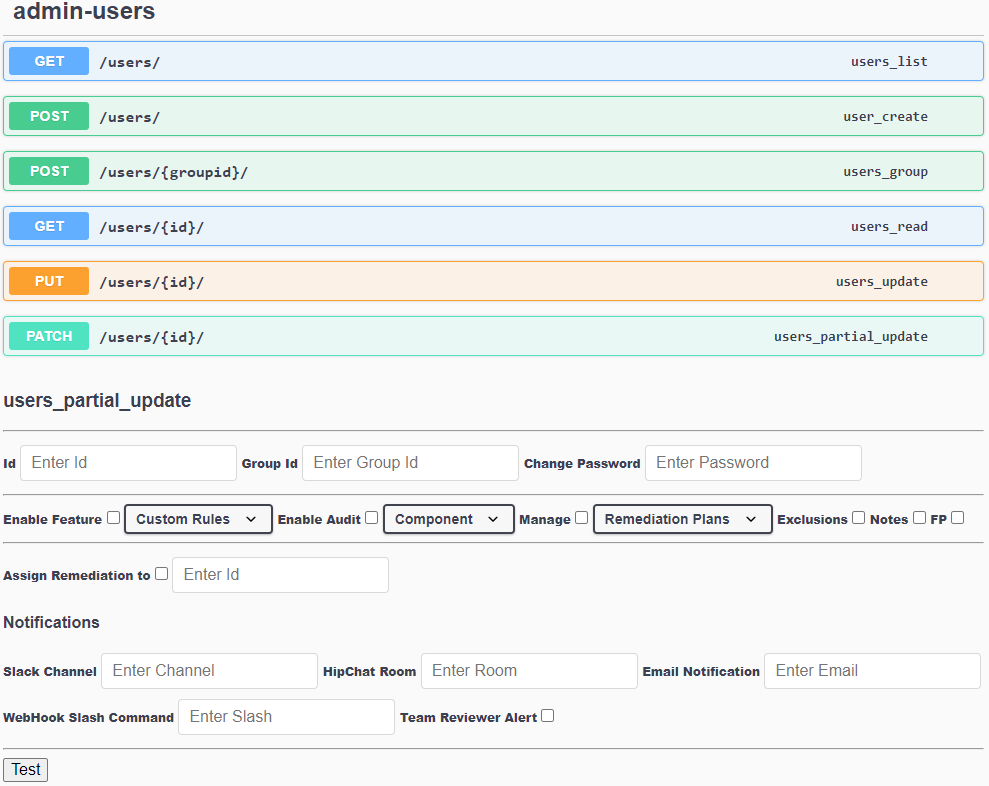

admin-users

This API requires an admin-level token and an HTTPS connection. It manages users in terms of:

Users List

User Creation: create users with an initial password and associate each user with at least one Group (Team). Groups must be created with a Users Partial Update request. Users can be created, deleted, modified or suspended

Group (Team) create, modify or delete

Assignment of Groups to Users, with quick user removal

User Update

Users Partial Update. It offers many parameters to modify the following user attributes:

Attributes

Password settings (policy, expiration, change password)

Enabling/Disabling Users or Groups feature-by-feature

Enabling/Disabling Users or Groups to Audit analysis, Per Product, Per Component or Per Scan

Enabling/Disabling Users or Groups to assign vulnerability remediations to other Users or Groups, Per Product, Per Component, Per Vulnerability (Finding) Category, Per Single Vulnerability (Finding) or Per Scan

Enabling/Disabling Users or Groups to Manage/Monitor Remediation plans Per Product, Per Component, Per Vulnerability (Finding) Category, Per Single Vulnerability (Finding) or Per Scan

Enabling/Disabling Users or Groups to Manage Custom Rules

Enabling/Disabling Users or Groups to create/modify/delete Vulnerability (Finding) Exclusions, Notes and False Positives

Enabling/Disabling Users or Groups to create, modify or delete Components

Adding/removing Users to/from the Admin role, for setting the above permissions

Enabling/Disabling notifications for a user or group using Slack, HipChat, Mail, WebHooks or Team Reviewer Alerts for: Product/Engagement/Test/Results/Report added, Jira Update, Upcoming engagement, User mentioned, Code Review involvement, Component Review requested. Notifications other than the above can be delivered via WebHooks.

Multiple Users Partial Update requests can be sent to set additional attributes.
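A Users Partial Update sends only the attributes being changed, typically as an HTTP PATCH. The sketch below builds such requests under stated assumptions: the `/api/v2/users/{id}/` path and the `is_active` and `email` fields come from upstream DefectDojo and standard Django users, not from Team Reviewer's documentation, and the host and token are hypothetical. Check the real attribute names in `/api/v2/doc/`.

```python
import json
import urllib.request

BASE_URL = "https://teamreviewer.example.com"  # hypothetical host


def partial_update_user(user_id, admin_token, **attributes):
    """Build a PATCH request that changes only the given user attributes."""
    if not BASE_URL.startswith("https://"):
        raise ValueError("admin-users calls require HTTPS")
    return urllib.request.Request(
        f"{BASE_URL}/api/v2/users/{user_id}/",
        data=json.dumps(attributes).encode("utf-8"),
        headers={
            "Authorization": f"Token {admin_token}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


# Several partial updates, each touching a different (assumed) attribute.
reqs = [
    partial_update_user(42, "ADMIN_TOKEN", is_active=False),
    partial_update_user(42, "ADMIN_TOKEN", email="new.address@acme.test"),
]
for r in reqs:
    print(r.get_method(), r.full_url, r.data)
```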

endpoints

To be used for DAST and IAST.

Currently the following endpoints are available:

Engagements (Start, Suspend, Delete and Close an Audit)

Findings (Vulnerability Management APIs)

Products (Application Groups)

Scan Settings

Scans

Tests/Reports

Additional APIs are also available, such as: Development Environments, Tools/Plugin Configuration, Jira Configurations, Metadata, System Settings, Technologies and Views.

Finally, there is a Command API for: System Status, Start/Restart/Stop/Suspend scan tasks, Execute Queries. This API requires an admin-level token.
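List endpoints such as Findings usually return results one page at a time. The sketch below follows `next` links until every result has been collected, assuming the upstream DefectDojo page shape (`count`, `next`, `results`) and `limit`/`severity` query parameters; both are assumptions to verify in `/api/v2/doc/`. The page fetcher is injected so the paging logic can be exercised without a server.

```python
import json
import urllib.request
from urllib.parse import urlencode


def findings_url(base, **filters):
    """Build a Findings list URL with query-string filters (names assumed)."""
    return f"{base}/api/v2/findings/?{urlencode(filters)}"


def make_fetcher(token):
    """Real fetcher: GET a page with the API key and decode the JSON body."""
    def fetch(url):
        req = urllib.request.Request(url, headers={"Authorization": f"Token {token}"})
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    return fetch


def iter_all(fetch_page, first_url):
    """Yield every result across pages by following the 'next' link."""
    url = first_url
    while url:
        page = fetch_page(url)
        yield from page["results"]
        url = page.get("next")


# Offline demo with an in-memory fake instead of make_fetcher(...):
pages = {
    "p1": {"count": 3, "next": "p2", "results": [{"id": 1}, {"id": 2}]},
    "p2": {"count": 3, "next": None, "results": [{"id": 3}]},
}
print([f["id"] for f in iter_all(pages.__getitem__, "p1")])
# → [1, 2, 3]
```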

TOOL INTEROPERABILITY STANDARDS

Team Reviewer supports the following Tool Interoperability Standards:

FPF

SCARF

SARIF

CEF, LEEF and SysLog

FPF

Team Reviewer has a native format that can be used to share findings with other systems. The findings contain the same information presented while auditing, but also include information about the project and the system that created the file. The file type is called Finding Packaging Format (FPF).

FPF files are JSON files with the following sections:

| Name | Type | Description |
|---|---|---|
| version | string | The Finding Packaging Format document version |
| meta | object | Describes the Team Reviewer instance that created the file |
| project | object | The project the findings are associated with |
| findings | array | An array of zero or more findings |
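The four top-level sections in the table can be assembled and checked as shown below. This is a sketch, not the official FPF schema: the contents of `meta` and `project`, and the version string, are illustrative placeholders; only the section names and their JSON types come from the table.

```python
import json
from datetime import datetime, timezone

# Top-level sections and JSON types from the FPF table.
FPF_SECTIONS = {"version": str, "meta": dict, "project": dict, "findings": list}


def build_fpf(project, findings, doc_version="1.0"):
    """Assemble an FPF document; meta/project contents are illustrative."""
    return {
        "version": doc_version,  # placeholder version string
        "meta": {
            "application": "Team Reviewer",
            "timestamp": datetime.now(timezone.utc).isoformat(),
        },
        "project": project,
        "findings": list(findings),
    }


def validate_fpf(doc):
    """Check that each top-level section exists with the documented type."""
    errors = []
    for key, expected in FPF_SECTIONS.items():
        if key not in doc:
            errors.append(f"missing '{key}'")
        elif not isinstance(doc[key], expected):
            errors.append(f"'{key}' should be {expected.__name__}")
    return errors


doc = build_fpf({"name": "shop", "version": "2.3"}, [])
print(validate_fpf(json.loads(json.dumps(doc))))
# → []
```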

SCARF

We adopted a unified tool output reporting format, called the SWAMP Common Assessment Results Format (SCARF). This format makes it much easier for a tool results viewer to display the output from a given tool. As a result, we have fostered interoperability among commercial and open source tools. The SCARF framework includes open source libraries in a variety of languages to produce SCARF and process SCARF. In addition, we have produced open source result parsers that translate the output of all the SCARF-based tools to SCARF. We continue to work towards tool interoperability standards by joining the Static Analysis Results Interchange Format (SARIF) Technical Committee. As a participating member, we contribute to creating a standardized, open source static analysis tool format to be adopted by all static analysis tool developers.

You can use the SCARF framework yourself with the following libraries:

| Available libraries | XML | JSON |
|---|---|---|
| Perl | | |
| Python | | |
| C/C++ | | |
| Java | | |

SARIF

We are also compliant with OASIS SARIF (Static Analysis Results Interchange Format). The following SDKs are available:

.NET SARIF SDK

Java Lycan

Other SARIF Interfaces
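To show what a SARIF 2.1.0 log looks like at its core, the sketch below converts simple finding dictionaries into the required `version` and `runs` properties, with one `result` per finding. The input dictionary keys and the severity-to-level mapping are assumptions made for this example; `error`, `warning` and `note` are standard SARIF result levels.

```python
import json

# Assumed mapping from Team Reviewer severities to SARIF result levels.
LEVELS = {"Critical": "error", "High": "error", "Medium": "warning",
          "Low": "note", "Info": "note"}


def to_sarif(tool_name, findings):
    """Build a minimal SARIF 2.1.0 log from simple finding dicts."""
    results = []
    for f in findings:
        results.append({
            "ruleId": f["rule"],
            "level": LEVELS[f["severity"]],
            "message": {"text": f["title"]},
            "locations": [{
                "physicalLocation": {
                    "artifactLocation": {"uri": f["file"]},
                    "region": {"startLine": f["line"]},
                }
            }],
        })
    return {
        "version": "2.1.0",
        "runs": [{"tool": {"driver": {"name": tool_name}}, "results": results}],
    }


sarif = to_sarif("Static Reviewer", [{
    "rule": "CWE-89", "severity": "High", "title": "SQL injection",
    "file": "src/db.py", "line": 42,
}])
print(json.dumps(sarif, indent=2))
```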

CEF-LEEF-SysLog

Common Event Format (CEF) and Log Event Extended Format (LEEF) are open-standard SysLog formats for log management and interoperability of security-related information from different devices, network appliances and applications.

We use these formats for output only, to export Team Reviewer correlated results to a number of SIEM tools, such as:

Micro Focus ArcSight

IBM QRadar

Splunk

Exabeam

Securonix UEBA

LogRhythm

STG RSA NetWitness

Rapid7 InsightIDR

LogPoint

McAfee Enterprise Security

They are Logging and Auditing file formats and are extensible, text-based formats designed to support multiple device types by offering the most relevant information.

CEF Field Definitions

| Field | Definition |
|---|---|
| Version | An integer that identifies the version of the CEF format. This information is used to determine what the following fields represent. Example: 0 |
| Device Vendor, Device Product, Device Version | Strings that uniquely identify the type of sending device. No two products should use the same device-vendor and device-product pair, although there is no central authority that manages these pairs. Be sure to assign unique name pairs. Example: JATP\|Cortex\|3.6.0.12 |
| Signature ID / Event Class ID | A unique identifier in CEF format that identifies the event type. This can be a string or an integer. The Event Class ID identifies the type of event reported. Example (one of these types): http, email, cnc, submission, exploit, datatheft |
| Malware Name | A string indicating the malware name. Example: TROJAN_FAREIT.DC |
| Severity / Incident Risk Mapping | An integer that reflects the severity of the event. For the Juniper ATP Appliance CEF, the severity value is an incident risk mapping in the range 0-10. Example: 9 |
| External ID | The Juniper ATP Appliance incident number. Example: externalId=1003 |
| Event ID | The Juniper ATP Appliance Event ID number. Example: eventId=13405 |
| Extension | A collection of key-value pairs; the keys are part of a predefined set. An event can contain any number of key-value pairs in any order, separated by spaces. Note: Review the definitions for these extension field labels provided in the section: CEF Extension Field Key=Value Pair Definitions. |
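A CEF event is a single line: `CEF:Version|Device Vendor|Device Product|Device Version|Signature ID|Name|Severity|Extension`. The sketch below formats one, escaping backslashes and pipes in header fields, and backslashes, equals signs and newlines in extension values, as the CEF specification requires. The vendor, product, version and field values are hypothetical examples, not Team Reviewer's actual export.

```python
def _esc_header(value):
    # Header fields: escape backslash first, then pipe.
    return str(value).replace("\\", "\\\\").replace("|", "\\|")


def _esc_ext(value):
    # Extension values: escape backslash, equals sign and newlines.
    return (str(value).replace("\\", "\\\\").replace("=", "\\=")
            .replace("\r\n", "\\n").replace("\n", "\\n"))


def cef_event(vendor, product, version, signature_id, name, severity, **ext):
    """Format one CEF:0 line; extension keys are passed as keyword arguments."""
    if not 0 <= severity <= 10:
        raise ValueError("CEF severity must be between 0 and 10")
    fields = [vendor, product, version, signature_id, name, severity]
    header = "CEF:0|" + "|".join(_esc_header(f) for f in fields)
    extension = " ".join(f"{k}={_esc_ext(v)}" for k, v in ext.items())
    return f"{header}|{extension}"


print(cef_event("Security Reviewer", "Team Reviewer", "1.0", "CWE-89",
                "SQL Injection", 8, externalId=1003, request="/login?user=a|b"))
# → CEF:0|Security Reviewer|Team Reviewer|1.0|CWE-89|SQL Injection|8|externalId=1003 request=/login?user\=a|b
```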

LEEF also has predefined attributes.

Developers

Team Reviewer provides other unique capabilities specifically designed for Software Developers.

Reduce Vulnerabilities and Weaknesses, and Increase Quality. Software developers can use our tools to assess their software for weaknesses and fix these problems before releasing it. Eliminating security and quality issues early in the development process reduces development costs and increases the return on investment (ROI), whereas fixing a bug or security issue after a release reduces the ROI and could damage the organization's reputation.

Simplify the Application of Software Assurance Tools. There are large human costs associated with selecting, acquiring, installing, configuring, maintaining, and integrating a software assurance tool into the development process. These costs can increase exponentially when using multiple tools. Team Reviewer eliminates this overhead because it manages the tools and automates their application. A software package developer simply makes software available for assessment in Team Reviewer and then selects the desired tools for the analysis. Results from multiple tools can be displayed concurrently in the Team Reviewer results viewer.

Enable Continuous Software Assurance. Team Reviewer supports continuous software assurance for developers by scheduling software package assessments on a recurring basis, for example, nightly. Before each assessment begins, the current version of the software package is associated with the assessment run and assessed using a pre-configured set of tools. Users can quickly check the status of their upcoming, ongoing, and completed assessments along with results of successfully completed assessments. Users can also choose to be notified via email when an assessment run finishes. By comparing results from one assessment to another, the software package developer can easily detect regressions or improvements between versions.
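Comparing one assessment with the next, as described above, amounts to a set difference over finding fingerprints. In the sketch below a fingerprint is a hypothetical (rule, file) pair; leaving out line numbers is a deliberate assumption so that unrelated edits which shift code do not show up as new findings.

```python
def compare_assessments(previous, current):
    """Diff two runs, each a set of finding fingerprints such as (rule, file)."""
    return {
        "new": sorted(current - previous),        # possible regressions
        "fixed": sorted(previous - current),      # improvements
        "unchanged": sorted(previous & current),
    }


nightly_1 = {("CWE-89", "db.py"), ("CWE-79", "views.py")}
nightly_2 = {("CWE-79", "views.py"), ("CWE-798", "settings.py")}
print(compare_assessments(nightly_1, nightly_2))
# → {'new': [('CWE-798', 'settings.py')], 'fixed': [('CWE-89', 'db.py')], 'unchanged': [('CWE-79', 'views.py')]}
```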

Infrastructure Managers

Infrastructure managers bring new technologies into their organizations. Increasingly, this means incorporating open-source software into a networked environment where bugs, defects, or vulnerabilities can create a window of opportunity for unintentional and malicious attacks. Assessing the quality and security of software before it is deployed is a critical step in reducing security risks. Infrastructure managers can use Team Reviewer as an evaluative tool before deploying new technologies or to assess existing software packages for security problems prior to being released.

Since Team Reviewer supports the selection of multiple software analysis tools and simultaneous assessments, infrastructure managers could experience significant time savings. The human cost of conducting software assurance is the effort required to select, acquire, install, configure, maintain, and run these tools on the software prior to deployment. Team Reviewer manages most of these tasks, making it possible for infrastructure managers to simply view the results of software that others assessed in Team Reviewer. Team Reviewer lowers the costs of software assurance, increasing the return on investment.

Team Reviewer offers other incentives for infrastructure operations.

Help Manage Risks Associated with Deployed Software. Infrastructure managers can evaluate the risks of using certain software by using the results of software assurance tools to determine the software’s security and quality. Results from Team Reviewer can also provide metrics to encourage software suppliers to improve the quality and security of their software.

Leverage Community Input to Improve Software Quality. Commonly deployed software can be assessed by the software developer or user community. Team Reviewer gives outside developers the capability to test open-source code prior to incorporating it into their own code.

Improve Visibility to Changes in Deployed Software. Continuous software assurance is the automated, repeated assessment of software by software assurance tools. As new tools are added to Team Reviewer, deployed software will be analyzed with improved rigor, identifying potential problems that need to be addressed by the software provider. As new versions of software are released, Team Reviewer will quickly identify changes in deployed software that will better inform infrastructure managers about key areas of interest impacting their organization.

Machine Learning

Rather than doing specific pattern-matching or detonating a file, the machine learning embedded in Team Reviewer parses the file and extracts thousands of features. These features, collectively called a feature vector, are run through a classifier to identify whether the file is good or bad based on known identifiers. Rather than looking for something specific, if a feature of the file behaves like any previously assessed cluster of files, the machine will mark that file as part of the cluster. Good machine learning requires training sets of good and bad verdicts, and adding new data or features will improve the process and reduce false positive rates.

Machine learning compensates for what dynamic and static analysis lack. A sample that is inert, doesn’t detonate, is crippled by a packer, has command and control down, or is not reliable can still be identified as malicious with machine learning. If numerous versions of a given threat have been seen and clustered together, and a sample has features like those in the cluster, the machine will assume the sample belongs to the cluster and mark it as malicious in seconds.

Only Able to Find More of What Is Already Known

Like the other two methods, machine learning should be looked at as a tool with many advantages, but also some disadvantages. Namely, machine learning trains the model based only on known identifiers. Unlike dynamic analysis, machine learning will never find anything truly original or unknown. If it comes across a threat that looks nothing like anything it has seen before, the machine will not flag it, as it is only trained to find more of what is already known.
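Both the cluster-membership idea and its limitation can be seen in a toy nearest-centroid classifier. This is an illustrative technique chosen for the example, not the model embedded in Team Reviewer; the three-number feature vectors, cluster labels and distance threshold are all hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of equally sized feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def classify(sample, clusters, max_distance):
    """Return the nearest cluster's label, or None if nothing is close enough."""
    best_label, best_dist = None, math.inf
    for label, vectors in clusters.items():
        d = math.dist(sample, centroid(vectors))
        if d < best_dist:
            best_label, best_dist = label, d
    return best_label if best_dist <= max_distance else None


clusters = {  # hypothetical, previously assessed and labelled samples
    "malicious:family-a": [[0.9, 0.8, 0.1], [0.8, 0.9, 0.2]],
    "benign": [[0.1, 0.2, 0.9], [0.2, 0.1, 0.8]],
}
print(classify([0.85, 0.8, 0.2], clusters, max_distance=0.3))  # → malicious:family-a
print(classify([0.9, 0.1, 0.1], clusters, max_distance=0.3))   # → None
```

The second sample resembles no known cluster, so it is not flagged at all, which is exactly the "only finds more of what is already known" limitation.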

Layered Techniques in a Platform

To thwart whatever advanced adversaries can throw at you, you need more than one piece of the puzzle. You need layered techniques – a concept that used to be a multi-vendor solution. While defense in depth is still appropriate and relevant, it needs to progress beyond multi-vendor point solutions to a platform that integrates static analysis, dynamic analysis and machine learning. All three working together can actualize defense in depth through layers of integrated solutions.

DISCLAIMER: Because we use open-source third-party components, we do not sell the product; instead, we offer yearly subscription-based commercial support to selected customers.

Team Reviewer is based on open source software developed by Aaron Weaver (OWASP DefectDojo Project)

COPYRIGHT (C) 2014-2022 SECURITY REVIEWER SRL. ALL RIGHTS RESERVED.